diff --git a/README.md b/README.md

index e232bab90..8b6c78f5b 100644

--- a/README.md

+++ b/README.md

@@ -101,6 +101,7 @@ PaddleScience 是一个基于深度学习框架 PaddlePaddle 开发的科学计

| High-resolution flow field reconstruction | [2D turbulent flow field reconstruction](https://aistudio.baidu.com/projectdetail/4493261?contributionType=1) | Data-driven | cycleGAN | Supervised learning | [Train Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tempoGAN/2d_train.mat)<br>[Eval Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tempoGAN/2d_valid.mat) | [Paper](https://arxiv.org/abs/2007.15324)|

| High-resolution flow field reconstruction | [Global field reconstruction from sparse sensors with Voronoi-embedding-assisted deep learning](https://aistudio.baidu.com/projectdetail/5807904) | Data-driven | CNN | Supervised learning | [Data1](https://drive.google.com/drive/folders/1K7upSyHAIVtsyNAqe6P8TY1nS5WpxJ2c)<br>[Data2](https://drive.google.com/drive/folders/1pVW4epkeHkT2WHZB7Dym5IURcfOP4cXu)<br>[Data3](https://drive.google.com/drive/folders/1xIY_jIu-hNcRY-TTf4oYX1Xg4_fx8ZvD) | [Paper](https://arxiv.org/pdf/2202.11214.pdf) |

| Flow field prediction | [Catheter](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/catheter/) | Data-driven | FNO | Supervised learning | [Data](https://aistudio.baidu.com/datasetdetail/291940) | [Paper](https://www.science.org/doi/pdf/10.1126/sciadv.adj1741) |

+| Flow field prediction | [CoNFiLD](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/confild/) | Data-driven | CoNFiLD | Supervised learning | [Data](https://aistudio.baidu.com/datasetdetail/9736790) | [Paper](https://doi.org/10.1038/s41467-024-54712-1) |

| Solver coupling | [CFD-GCN](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/cfdgcn) | Data-driven | GCN | Supervised learning | [Data](https://aistudio.baidu.com/aistudio/datasetdetail/184778)<br>[Mesh](https://paddle-org.bj.bcebos.com/paddlescience/datasets/CFDGCN/meshes.tar) | [Paper](https://arxiv.org/abs/2007.04439)|

| Structural analysis | [1D Euler beam deformation](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/euler_beam) | Physics-driven | MLP | Unsupervised learning | - | - |

| Structural analysis | [2D plate deformation](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/biharmonic2d) | Physics-driven | MLP | Unsupervised learning | - | [Paper](https://arxiv.org/abs/2108.07243) |

diff --git a/docs/index.md b/docs/index.md

index c9e288ee5..ee3f0913b 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -124,6 +124,7 @@

| High-resolution flow field reconstruction | [2D turbulent flow field reconstruction](https://aistudio.baidu.com/projectdetail/4493261?contributionType=1) | Data-driven | cycleGAN | Supervised learning | [Train Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tempoGAN/2d_train.mat)<br>[Eval Data](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tempoGAN/2d_valid.mat) | [Paper](https://arxiv.org/abs/2007.15324)|

| High-resolution flow field reconstruction | [Global field reconstruction from sparse sensors with Voronoi-embedding-assisted deep learning](https://aistudio.baidu.com/projectdetail/5807904) | Data-driven | CNN | Supervised learning | [Data1](https://drive.google.com/drive/folders/1K7upSyHAIVtsyNAqe6P8TY1nS5WpxJ2c)<br>[Data2](https://drive.google.com/drive/folders/1pVW4epkeHkT2WHZB7Dym5IURcfOP4cXu)<br>[Data3](https://drive.google.com/drive/folders/1xIY_jIu-hNcRY-TTf4oYX1Xg4_fx8ZvD) | [Paper](https://arxiv.org/pdf/2202.11214.pdf) |

| Flow field prediction | [Catheter](https://aistudio.baidu.com/projectdetail/5379212) | Data-driven | FNO | Supervised learning | [Data](https://aistudio.baidu.com/datasetdetail/291940) | [Paper](https://www.science.org/doi/pdf/10.1126/sciadv.adj1741) |

+| Flow field prediction | [CoNFiLD](./zh/examples/confild.md) | Data-driven | CoNFiLD | Supervised learning | [Data](https://aistudio.baidu.com/datasetdetail/9736790) | [Paper](https://doi.org/10.1038/s41467-024-54712-1) |

| Solver coupling | [CFD-GCN](./zh/examples/cfdgcn.md) | Data-driven | GCN | Supervised learning | [Data](https://aistudio.baidu.com/aistudio/datasetdetail/184778)<br>[Mesh](https://paddle-org.bj.bcebos.com/paddlescience/datasets/CFDGCN/meshes.tar) | [Paper](https://arxiv.org/abs/2007.04439)|

| Structural analysis | [1D Euler beam deformation](./zh/examples/euler_beam.md) | Physics-driven | MLP | Unsupervised learning | - | - |

| Structural analysis | [2D plate deformation](./zh/examples/biharmonic2d.md) | Physics-driven | MLP | Unsupervised learning | - | [Paper](https://arxiv.org/abs/2108.07243) |

diff --git a/docs/zh/examples/confild.md b/docs/zh/examples/confild.md

new file mode 100644

index 000000000..734da52db

--- /dev/null

+++ b/docs/zh/examples/confild.md

@@ -0,0 +1,103 @@

+# AI-assisted spatiotemporal turbulence generation: Conditional Neural Field Latent Diffusion model (CoNFiLD)

+

+Distributed under a Creative Commons Attribution license 4.0 (CC BY).

+

+## 1. Background
+### 1.1 Paper information

+| Year | Journal | Authors | Citations | Paper PDF and supplementary material |

+|----------------|---------------------|--------------------------------------------------------------------------------------------------|--------|----------------------------------------------------------------------------------------------------|

+| January 3, 2024 | Nature Communications | Pan Du, Meet Hemant Parikh, Xiantao Fan, Xin-Yang Liu, Jian-Xun Wang | 15 | [Paper](https://doi.org/10.1038/s41467-024-54712-1)<br>[Code repository](https://github.com/jx-wang-s-group/CoNFILD) |

+

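+### 1.2 Authors
+- **Corresponding author**: Jian-Xun Wang (王建勋)<br>Affiliations: Department of Aerospace and Mechanical Engineering, University of Notre Dame; Department of Mechanical and Aerospace Engineering, Cornell University<br>Research interests: turbulence modeling, generative AI, physics-informed machine learning
+
+- **Other authors**:<br>Pan Du, Meet Hemant Parikh (co-first authors): PhD students at the University of Notre Dame, working on generative models and computational fluid dynamics<br>Xiantao Fan, Xin-Yang Liu: research assistants at the University of Notre Dame, responsible for numerical simulation and data generation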

+

+### 1.3 Model and reproduction code
+| Problem type | Run online | Network architecture | Metric |

+|------------------------|----------------------------------------------------------------------------------------------------------------------------|------------------------|-----------------------|

+| Spatiotemporal turbulence generation | [aistudio](https://aistudio.baidu.com/project/edit/9736790) | Conditional neural field + latent diffusion model | MSE: 0.041 (velocity field) |

+

+=== "Model training command"
+
+    ``` sh
+    python confild.py mode=train
+    ```
+
+=== "Quick evaluation with the pretrained model"
+
+    ``` sh
+    python confild.py mode=eval
+    ```

+

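+## 2. Problem definition
+### 2.1 Research background
+Turbulence simulation is essential in aerospace, ocean engineering, and other fields. However, traditional methods such as direct numerical simulation (DNS) and large eddy simulation (LES) are computationally expensive and hard to apply at high Reynolds numbers or in real-time settings. Most existing deep learning models are deterministic, so they struggle to capture the chaotic nature of turbulence, and they perform poorly in complex geometric domains.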

+

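+### 2.2 Key challenges
+1. **High-dimensional data**: 3D spatiotemporal turbulence data can reach \(O(10^9)\) degrees of freedom, so conventional generative models need enormous amounts of memory.
+2. **Stochasticity modeling**: the model must capture both the multiscale statistics and the instantaneous dynamics of turbulence.
+3. **Geometric adaptability**: the model must support irregular computational domains and adaptive meshes.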
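To put the \(O(10^9)\) figure in perspective, the arithmetic below estimates the raw storage for one such sample. It is a back-of-the-envelope sketch that assumes float32 storage (4 bytes per value). The batch size of 16 is a hypothetical choice, and network activations and optimizer state would add considerably more.

```python
def sample_memory_gib(num_values: float, bytes_per_value: int = 4) -> float:
    """Memory needed to hold `num_values` numbers, in GiB (2**30 bytes)."""
    return num_values * bytes_per_value / 2**30


# O(10^9) values per spatiotemporal sample, stored as float32 (4 bytes each)
per_sample = sample_memory_gib(1e9)
print(f"{per_sample:.2f} GiB per sample")  # 3.73 GiB per sample
# a hypothetical batch of 16 such samples, before any network activations
print(f"{sample_memory_gib(16 * 1e9):.1f} GiB per batch")  # 59.6 GiB per batch
```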

+

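+### 2.3 Proposed approach
+The paper proposes the **Conditional Neural Field Latent Diffusion model (CoNFiLD)**, which addresses these challenges with a three-stage framework:
+1. **Neural field encoding**: the high-dimensional flow field is compressed into a low-dimensional latent representation, with compression ratios of 0.002%-0.017%.
+2. **Latent diffusion**: a probabilistic diffusion process runs in the latent space and learns the statistical distribution of turbulence.
+3. **Zero-shot conditional generation**: combined with Bayesian inference, the model handles tasks such as sensor-based reconstruction and super-resolution without retraining.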

+

+

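+*Framework overview: the CNF encoder maps flow fields to the latent space, the diffusion model generates new latent samples, and the decoder reconstructs the physical fields.*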

+

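+## 3. Model architecture
+### 3.1 Conditional neural field (CNF)
+- **Architecture**: based on the SIREN network, whose sinusoidal activation functions capture periodic and fine-scale features.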

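+- **Formulation**: the latent vector \(\mathbf{L}\) conditions the SIREN through FiLM (Feature-wise Linear Modulation), which shifts the bias of every hidden layer:
+  $$
+  \mathbf{h}_0 = \mathbf{x}, \qquad
+  \mathbf{h}_{i+1} = \sin\big(\omega_0 (\mathbf{W}_i \mathbf{h}_i + \mathbf{b}_i + \boldsymbol{\beta}_i(\mathbf{L}))\big), \qquad
+  \mathscr{E}(\mathbf{x},\mathbf{L}) = \mathbf{W}_n \mathbf{h}_n + \mathbf{b}_n
+  $$
+  Here \(\mathbf{x}\) is the query coordinate, \(\omega_0\) is the SIREN frequency factor, and \(\boldsymbol{\beta}_i(\mathbf{L})\) is the FiLM shift that the latent vector produces for layer \(i\).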
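The sketch below shows shift-only FiLM conditioning of a SIREN in plain NumPy: a linear function of the latent vector offsets each hidden layer's pre-activation bias. It is not PaddleScience's `SIRENAutodecoder_film`. The helper names `init_film_siren` and `film_siren`, the linear FiLM heads, and the choice to scale the shifted pre-activation by \(\omega_0 = 30\) are all assumptions. The layer sizes follow the Case 1 config (`in_coord_features: 2`, `hidden_features: 128`, `num_hidden_layers: 10`, `out_features: 3`, `in_latent_features: 128`, `coord_shape: [918, 2]`).

```python
import numpy as np


def init_film_siren(rng, in_dim, hidden, out_dim, n_hidden, latent_dim, omega0=30.0):
    """Random parameters for a shift-only FiLM SIREN (illustrative, not ppsci's weights)."""
    dims = [in_dim] + [hidden] * n_hidden
    layers = []
    for i, (d_in, d_out) in enumerate(zip(dims[:-1], dims[1:])):
        # SIREN initialisation: U(-1/d_in, 1/d_in) for the first layer,
        # U(-sqrt(6/d_in)/omega0, sqrt(6/d_in)/omega0) for the others
        bound = 1.0 / d_in if i == 0 else np.sqrt(6.0 / d_in) / omega0
        W = rng.uniform(-bound, bound, size=(d_out, d_in))
        b = np.zeros(d_out)
        F = rng.normal(0.0, 0.01, size=(d_out, latent_dim))  # hypothetical FiLM head: latent -> bias shift
        layers.append((W, b, F))
    bound = np.sqrt(6.0 / hidden) / omega0
    head = (rng.uniform(-bound, bound, size=(out_dim, hidden)), np.zeros(out_dim))
    return layers, head, omega0


def film_siren(x, latent, params):
    """x: (N, in_dim) coordinates, latent: (latent_dim,) code -> (N, out_dim) field."""
    layers, (W_out, b_out), omega0 = params
    h = x
    for W, b, F in layers:
        shift = F @ latent  # beta_i(L): one bias shift per hidden unit
        h = np.sin(omega0 * (h @ W.T + b + shift))
    return h @ W_out.T + b_out  # linear output layer


rng = np.random.default_rng(0)
params = init_film_siren(rng, in_dim=2, hidden=128, out_dim=3, n_hidden=10, latent_dim=128)
coords = rng.uniform(-1.0, 1.0, size=(918, 2))
field_a = film_siren(coords, rng.normal(size=128), params)
field_b = film_siren(coords, rng.normal(size=128), params)
print(field_a.shape)  # (918, 3)
```

All snapshots share one network, so changing only the latent changes the decoded field. This is what allows a compact latent to represent each snapshot.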

+

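+### 3.2 Latent diffusion model
+- **Forward process**: Gaussian noise with variance schedule \(\beta_t\) is added step by step, taking the latent representation from \(\mathbf{z}_0\) to \(\mathbf{z}_T\).
+- **Reverse process**: a U-Net \(\epsilon_\theta\) is trained to predict the added noise, and new samples are generated by iterative denoising. Here \(\alpha_t = 1-\beta_t\), \(\bar{\alpha}_t = \prod_{s=1}^{t}\alpha_s\), and \(\epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I})\) is fresh noise drawn at each step: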

+ $$

+ \mathbf{z}_{t-1} = \frac{1}{\sqrt{\alpha_t}} \left( \mathbf{z}_t - \frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}} \epsilon_\theta(\mathbf{z}_t, t) \right) + \sigma_t \epsilon

+ $$
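Below is a minimal NumPy sketch of the forward and reverse steps. It assumes the cosine schedule of Nichol and Dhariwal (the configs set `noise_schedule: "cosine"` and `steps: 1000`) and the common choice \(\sigma_t^2 = \beta_t\). The helper names are hypothetical, and the actual implementation may use a different variance or parameterization. The final check uses the true noise as a stand-in for a perfect \(\epsilon_\theta\).

```python
import numpy as np


def cosine_betas(T, s=0.008, max_beta=0.999):
    """Cosine noise schedule: beta_t for t = 1..T, clipped at max_beta."""
    t = np.arange(T + 1) / T
    f = np.cos((t + s) / (1 + s) * np.pi / 2) ** 2
    alpha_bar = f / f[0]
    return np.clip(1.0 - alpha_bar[1:] / alpha_bar[:-1], 0.0, max_beta)


def q_sample(z0, t, alpha_bars, eps):
    """Forward process in closed form: z_t = sqrt(abar_t) z0 + sqrt(1 - abar_t) eps."""
    ab = alpha_bars[t - 1]
    return np.sqrt(ab) * z0 + np.sqrt(1.0 - ab) * eps


def p_step(zt, t, eps_pred, betas, alphas, alpha_bars, rng):
    """One reverse step of the update above, using sigma_t^2 = beta_t."""
    a, ab = alphas[t - 1], alpha_bars[t - 1]
    mean = (zt - (1.0 - a) / np.sqrt(1.0 - ab) * eps_pred) / np.sqrt(a)
    if t == 1:
        return mean  # no fresh noise on the final step
    return mean + np.sqrt(betas[t - 1]) * rng.standard_normal(zt.shape)


T = 1000  # matches `steps: 1000` in the configs
betas = cosine_betas(T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

rng = np.random.default_rng(0)
z0 = rng.standard_normal((128, 128))  # e.g. time_length x latent_length in Case 1
eps = rng.standard_normal(z0.shape)
z1 = q_sample(z0, 1, alpha_bars, eps)
# with a perfect noise predictor, the last reverse step returns z0
z0_hat = p_step(z1, 1, eps, betas, alphas, alpha_bars, rng)
print(np.allclose(z0_hat, z0))  # True
```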

+

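+### 3.3 Zero-shot conditional generation
+- **Bayesian posterior sampling**: given sparse observations \(\Psi\), latent-space sampling is steered by the gradient of the observation likelihood. By Bayes' rule, and because the evidence \(p(\Psi)\) does not depend on \(\mathbf{z}_t\):
+  $$
+  \nabla_{\mathbf{z}_t} \log p(\mathbf{z}_t|\Psi) = \nabla_{\mathbf{z}_t} \log p(\Psi|\mathbf{z}_t) + \nabla_{\mathbf{z}_t} \log p(\mathbf{z}_t)
+  $$
+  The trained unconditional diffusion model supplies the prior score. The likelihood term is intractable for noisy \(\mathbf{z}_t\) and has to be approximated in practice.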
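The posterior-score decomposition holds with equality, because \(\log p(\Psi)\) does not depend on \(\mathbf{z}_t\). You can check this on a toy problem where every term has a closed form. The sketch assumes a standard Gaussian prior \(\mathbf{z} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})\) and linear sensors \(\Psi = H\mathbf{z} + \text{noise}\) with noise variance \(\sigma^2\). \(H\), the sizes, and the helper names are hypothetical. The sketch ignores the diffusion time index, so it illustrates only the score decomposition, not the full diffusion posterior sampling that CoNFiLD uses.

```python
import numpy as np


def prior_score(z):
    """grad_z log N(z; 0, I)."""
    return -z


def likelihood_score(z, H, psi, sigma):
    """grad_z log N(psi; H z, sigma^2 I)."""
    return H.T @ (psi - H @ z) / sigma**2


def posterior_score(z, H, psi, sigma):
    # Bayes: grad log p(z|psi) = grad log p(psi|z) + grad log p(z); log p(psi) is constant in z
    return likelihood_score(z, H, psi, sigma) + prior_score(z)


rng = np.random.default_rng(0)
d, m, sigma = 8, 3, 0.1  # latent size, number of sensors, noise std (all hypothetical)
H = rng.normal(size=(m, d))
psi = rng.normal(size=m)
# The Gaussian posterior mean is available in closed form; the posterior score vanishes there.
A = np.eye(d) + H.T @ H / sigma**2
z_post = np.linalg.solve(A, H.T @ psi / sigma**2)
print(np.abs(posterior_score(z_post, H, psi, sigma)).max())  # ~0 up to round-off
```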

+

+## 4. Solution
+### 4.1 Dataset preparation
+The data files are organized as follows:

+```
+data            # training dataset for the CNF
+|
+|-- data.npy    # data to be fitted
+|
+|-- coords.npy  # query coordinates
+```
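As an illustration of this layout, the sketch below writes synthetic stand-ins for `data.npy` and `coords.npy` to a temporary folder and loads them back. The random values and the shapes, borrowed from Case 1's `coord_shape: [918, 2]`, are assumptions. The sketch also reproduces what `getdata` in `confild.py` does when no coordinate file is configured: it builds a uniform `ij`-indexed grid on \([0, 1]^d\) over the spatial axes of the data. The grid shown here uses Case 2's `[400, 100]` shape.

```python
import os
import tempfile

import numpy as np


def default_coords(spatial_shape):
    """Uniform grid in [0, 1]^d, mirroring getdata() when no coor_path is set."""
    axes = [np.linspace(0, 1, n) for n in spatial_shape]
    return np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1).astype("float32")


# Synthetic stand-in for the layout above (random values, illustration only):
# data.npy -> (time, points, channels), coords.npy -> (points, coord_dim)
rng = np.random.default_rng(0)
root = os.path.join(tempfile.mkdtemp(), "data")
os.makedirs(root)
np.save(os.path.join(root, "data.npy"), rng.random((4, 918, 3)).astype("float32"))
np.save(os.path.join(root, "coords.npy"), rng.random((918, 2)).astype("float32"))

fois = np.load(os.path.join(root, "data.npy"))
coords = np.load(os.path.join(root, "coords.npy"))
assert fois.shape[1] == coords.shape[0]  # one coordinate per spatial point

grid = default_coords((400, 100))  # structured 2D grid, e.g. Case 2
print(grid.shape)  # (400, 100, 2)
```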

+

+After loading, the data must be normalized to make training easier. The code is as follows:
+```python linenums="57"
+--8<--
+examples/confild/confild.py:57:133
+--8<--
+```
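The configs use the `-11` method (`normalizer: {method: "-11", dim: 0}`), which maps each feature to \([-1, 1]\) using its max and min along axis 0. The NumPy sketch below reproduces the formulas of `Normalizer_ts.fnormalize` and `fdenormalize` on synthetic data. It is a standalone re-implementation for illustration, and its helper names are hypothetical.

```python
import numpy as np


def fit_minmax(data, axis=0):
    """Max/min along `axis`, kept broadcastable (like `dim: 0` in the configs)."""
    return data.max(axis=axis, keepdims=True), data.min(axis=axis, keepdims=True)


def normalize_m11(data, params):
    """Map each feature to [-1, 1]: (x - min) / (max - min) * 2 - 1."""
    mx, mn = params
    return (data - mn) / (mx - mn) * 2 - 1


def denormalize_m11(data_norm, params):
    """Inverse of normalize_m11."""
    mx, mn = params
    return (data_norm + 1) / 2 * (mx - mn) + mn


rng = np.random.default_rng(0)
fois = rng.normal(size=(10, 918, 3)).astype("float32")  # (time, points, channels), synthetic
params = fit_minmax(fois, axis=0)
normed = normalize_m11(fois, params)
print(normed.min(), normed.max())  # -1.0 1.0
restored = denormalize_m11(normed, params)
```

A constant feature (max equal to min) would cause a division by zero. The original class does not guard against this case either.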

+

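+### 4.2 CoNFiLD model
+CoNFiLD performs Bayesian posterior sampling with sparse sensor measurements as the conditioning input. The trained unconditional diffusion model serves as the prior, and diffusion posterior sampling accounts for the uncertainty introduced by measurement noise. The state-to-observation mapping relates the condition vector to the flow field. Through this mapping, the unconditional score function is adjusted so that generation is guided toward full spatiotemporal flow fields consistent with the sensor data. The result is a reconstruction together with an estimate of its uncertainty. The code is as follows: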

+

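+```python linenums="304"
+--8<--
+ppsci/arch/confild.py:304:420
+--8<--
+```
+To access specific variables accurately and quickly during computation, the network's input variable names are set to `["confild_x", "latent_z"]` and its output variable name to `["confild_output"]`. The code that follows uses the same names.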
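PaddleScience architectures generally exchange data as dicts keyed by the configured `input_keys` and `output_keys`. The toy function below is a hypothetical stand-in, not the real CNF. It only shows how the names `confild_x`, `latent_z`, and `confild_output` tie inputs to outputs. The tanh layer and the weight shapes are arbitrary choices.

```python
import numpy as np

INPUT_KEYS = ("confild_x", "latent_z")  # names from the CONFILD config section
OUTPUT_KEYS = ("confild_output",)


def toy_cnf(inputs, w_coord, w_latent):
    """Hypothetical stand-in for the CNF: reads inputs and returns outputs by key."""
    x = inputs[INPUT_KEYS[0]]  # (B, N, in_coord_features)
    z = inputs[INPUT_KEYS[1]]  # (B, in_latent_features)
    # broadcast the per-sample latent term over all N query points
    out = np.tanh(x @ w_coord + (z @ w_latent)[:, None, :])  # (B, N, out_features)
    return {OUTPUT_KEYS[0]: out}


rng = np.random.default_rng(0)
batch = {
    "confild_x": rng.uniform(-1, 1, size=(4, 918, 2)),
    "latent_z": rng.normal(size=(4, 128)),
}
outputs = toy_cnf(batch, rng.normal(size=(2, 3)), rng.normal(size=(128, 3)))
print(outputs["confild_output"].shape)  # (4, 918, 3)
```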

+

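+### 4.3 Model training and evaluation
+With the setup above in place, combine the instantiated objects as described in this document, then launch training and evaluation:
+```python linenums="218"
+--8<--
+examples/confild/confild.py:218:503
+--8<--
+```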
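The configs give the CNF and the per-snapshot latents separate learning rates (`TRAIN.lr.cnf: 1.e-4`, `TRAIN.lr.latents: 1.e-5`). The script also imports `LatentContainer`, which suggests the latents are trained as free parameters alongside the network (auto-decoding). The sketch below illustrates that idea under several simplifying assumptions:

- a linear decoder replaces the SIREN;
- the data are synthetic;
- training uses plain gradient descent, keeping the config's 10:1 ratio but with much larger step sizes, since the actual optimizer is not shown here.

The function name is hypothetical.

```python
import numpy as np


def train_autodecoder(Y, latent_dim=4, steps=500, lr_decoder=1.0, lr_latents=0.1, seed=0):
    """Jointly fit a shared linear decoder W and one latent z_n per sample so that z_n W^T ~ y_n."""
    rng = np.random.default_rng(seed)
    n, out = Y.shape
    Z = rng.normal(size=(n, latent_dim))  # one trainable latent per snapshot
    W = rng.normal(scale=0.1, size=(out, latent_dim))  # shared decoder weights
    losses = []
    for _ in range(steps):
        R = Z @ W.T - Y  # residual, shape (n, out)
        losses.append(float(np.mean(R**2)))
        grad_W = 2.0 / R.size * R.T @ Z  # d(mean R^2)/dW
        grad_Z = 2.0 / R.size * R @ W  # d(mean R^2)/dZ
        W -= lr_decoder * grad_W  # "cnf" learning rate
        Z -= lr_latents * grad_Z  # 10x smaller "latents" learning rate
    return W, Z, losses


Y = np.random.default_rng(1).normal(size=(32, 6))  # 32 synthetic snapshots, 6 values each
W, Z, losses = train_autodecoder(Y)
print(losses[0] > losses[-1])  # True
```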

+

+## 5. Results

+

diff --git a/examples/confild/conf/confild_case1.yaml b/examples/confild/conf/confild_case1.yaml

new file mode 100644

index 000000000..0f5bbf6bf

--- /dev/null

+++ b/examples/confild/conf/confild_case1.yaml

@@ -0,0 +1,119 @@

+defaults:
+  - ppsci_default
+  - TRAIN: train_default
+  - TRAIN/ema: ema_default
+  - TRAIN/swa: swa_default
+  - EVAL: eval_default
+  - INFER: infer_default
+  - hydra/job/config/override_dirname/exclude_keys: exclude_keys_default
+  - _self_
+
+hydra:
+  run:
+    dir: outputs_confild_case1/${now:%Y-%m-%d}/${now:%H-%M-%S}/${hydra.job.override_dirname}
+  job:
+    name: ${mode}
+    chdir: false
+  callbacks:
+    init_callback:
+      _target_: ppsci.utils.callbacks.InitCallback
+  sweep:
+    dir: ${hydra.run.dir}
+    subdir: ./
+
+mode: infer
+seed: 2025
+output_dir: ${hydra:run.dir}
+log_freq: 20
+
+TRAIN:
+  batch_size: 64
+  test_batch_size: 256
+  epochs: 9800
+  mutil_GPU: 1
+  lr:
+    cnf: 1.e-4
+    latents: 1.e-5
+
+EVAL:
+  confild_pretrained_model_path: ./outputs_confild_case1/confild_case1/epoch_99999
+  latent_pretrained_model_path: ./outputs_confild_case1/latent_case1/epoch_99999
+
+CONFILD:
+  input_keys: ["confild_x", "latent_z"]
+  output_keys: ["confild_output"]
+  num_hidden_layers: 10
+  out_features: 3
+  hidden_features: 128
+  in_coord_features: 2
+  in_latent_features: 128
+
+Latent:
+  input_keys: ["latent_x"]
+  output_keys: ["latent_z"]
+  N_samples: 16000
+  lumped: True
+  N_features: 128
+  dims: 2
+
+INFER:
+  Latent:
+    INFER:
+      pretrained_model_path: null
+      export_path: ./inference/latent_case1
+      pdmodel_path: ${INFER.Latent.INFER.export_path}.pdmodel
+      pdiparams_path: ${INFER.Latent.INFER.export_path}.pdiparams
+      onnx_path: ${INFER.Latent.INFER.export_path}.onnx
+      device: gpu
+      engine: native
+      precision: fp32
+      ir_optim: true
+      min_subgraph_size: 5
+      gpu_mem: 2000
+      gpu_id: 0
+      max_batch_size: 1024
+      num_cpu_threads: 10
+      log_freq: 20
+  Confild:
+    INFER:
+      pretrained_model_path: null
+      export_path: ./inference/confild_case1
+      pdmodel_path: ${INFER.Confild.INFER.export_path}.pdmodel
+      pdiparams_path: ${INFER.Confild.INFER.export_path}.pdiparams
+      onnx_path: ${INFER.Confild.INFER.export_path}.onnx
+      device: gpu
+      engine: native
+      precision: fp32
+      ir_optim: true
+      min_subgraph_size: 5
+      gpu_mem: 2000
+      gpu_id: 0
+      max_batch_size: 1024
+      num_cpu_threads: 10
+      coord_shape: [918, 2]
+      latents_shape: [1, 128]
+      log_freq: 20
+      batch_size: 64
+
+Uncondiction_INFER:
+  batch_size: 16
+  test_batch_size: 16
+  time_length: 128
+  latent_length: 128
+  image_size: 128
+  num_channels: 128
+  num_res_blocks: 2
+  num_heads: 4
+  num_head_channels: 64
+  attention_resolutions: "32,16,8"
+  channel_mult: null
+  steps: 1000
+  noise_schedule: "cosine"
+
+Data:
+  data_path: data/Case1/case1_data.npy
+  coor_path: data/Case1/case1_coords.npy
+  normalizer:
+    method: "-11"
+    dim: 0
+  load_data_fn: load_elbow_flow

diff --git a/examples/confild/conf/confild_case2.yaml b/examples/confild/conf/confild_case2.yaml

new file mode 100644

index 000000000..ab6208c09

--- /dev/null

+++ b/examples/confild/conf/confild_case2.yaml

@@ -0,0 +1,103 @@

+defaults:
+  - ppsci_default
+  - TRAIN: train_default
+  - TRAIN/ema: ema_default
+  - TRAIN/swa: swa_default
+  - EVAL: eval_default
+  - INFER: infer_default
+  - hydra/job/config/override_dirname/exclude_keys: exclude_keys_default
+  - _self_
+
+hydra:
+  run:
+    dir: ./outputs_confild_case2
+  job:
+    name: ${mode}
+    chdir: false
+  callbacks:
+    init_callback:
+      _target_: ppsci.utils.callbacks.InitCallback
+  sweep:
+    dir: ${hydra.run.dir}
+    subdir: ./
+
+mode: infer
+seed: 2025
+output_dir: ${hydra:run.dir}
+log_freq: 20
+
+TRAIN:
+  batch_size: 10
+  test_batch_size: 10
+  epochs: 44500
+  mutil_GPU: 1
+  lr:
+    cnf: 1.e-4
+    latents: 1.e-5
+
+EVAL:
+  confild_pretrained_model_path: ./outputs_confild_case2/confild_case2/epoch_99999
+  latent_pretrained_model_path: ./outputs_confild_case2/latent_case2/epoch_99999
+
+CONFILD:
+  input_keys: ["confild_x", "latent_z"]
+  output_keys: ["confild_output"]
+  num_hidden_layers: 10
+  out_features: 4
+  hidden_features: 256
+  in_coord_features: 2
+  in_latent_features: 256
+
+Latent:
+  input_keys: ["latent_x"]
+  output_keys: ["latent_z"]
+  N_samples: 1200
+  lumped: False
+  N_features: 256
+  dims: 2
+
+INFER:
+  Latent:
+    INFER:
+      pretrained_model_path: null
+      export_path: ./inference/latent_case2
+      pdmodel_path: ${INFER.Latent.INFER.export_path}.pdmodel
+      pdiparams_path: ${INFER.Latent.INFER.export_path}.pdiparams
+      onnx_path: ${INFER.Latent.INFER.export_path}.onnx
+      device: gpu
+      engine: native
+      precision: fp32
+      ir_optim: true
+      min_subgraph_size: 5
+      gpu_mem: 2000
+      gpu_id: 0
+      max_batch_size: 1024
+      num_cpu_threads: 10
+      log_freq: 20
+  Confild:
+    INFER:
+      pretrained_model_path: null
+      export_path: ./inference/confild_case2
+      pdmodel_path: ${INFER.Confild.INFER.export_path}.pdmodel
+      pdiparams_path: ${INFER.Confild.INFER.export_path}.pdiparams
+      onnx_path: ${INFER.Confild.INFER.export_path}.onnx
+      device: gpu
+      engine: native
+      precision: fp32
+      ir_optim: true
+      min_subgraph_size: 5
+      gpu_mem: 2000
+      gpu_id: 0
+      max_batch_size: 1024
+      num_cpu_threads: 10
+      coord_shape: [400, 100, 2]
+      latents_shape: [1, 1, 256]
+      log_freq: 20
+      batch_size: 40
+
+Data:
+  data_path: /home/aistudio/work/extracted/data/Case2/data.npy
+  normalizer:
+    method: "-11"
+    dim: 0
+  load_data_fn: load_channel_flow

diff --git a/examples/confild/conf/confild_case3.yaml b/examples/confild/conf/confild_case3.yaml

new file mode 100644

index 000000000..140ced107

--- /dev/null

+++ b/examples/confild/conf/confild_case3.yaml

@@ -0,0 +1,104 @@

+defaults:
+  - ppsci_default
+  - TRAIN: train_default
+  - TRAIN/ema: ema_default
+  - TRAIN/swa: swa_default
+  - EVAL: eval_default
+  - INFER: infer_default
+  - hydra/job/config/override_dirname/exclude_keys: exclude_keys_default
+  - _self_
+
+hydra:
+  run:
+    dir: ./outputs_confild_case3
+  job:
+    name: ${mode}
+    chdir: false
+  callbacks:
+    init_callback:
+      _target_: ppsci.utils.callbacks.InitCallback
+  sweep:
+    dir: ${hydra.run.dir}
+    subdir: ./
+
+mode: infer
+seed: 2025
+output_dir: ${hydra:run.dir}
+log_freq: 20
+
+TRAIN:
+  batch_size: 100
+  test_batch_size: 100
+  epochs: 4800
+  mutil_GPU: 2
+  lr:
+    cnf: 1.e-4
+    latents: 1.e-5
+
+EVAL:
+  confild_pretrained_model_path: ./outputs_confild_case3/confild_case3/epoch_99999
+  latent_pretrained_model_path: ./outputs_confild_case3/latent_case3/epoch_99999
+
+CONFILD:
+  input_keys: ["confild_x", "latent_z"]
+  output_keys: ["confild_output"]
+  num_hidden_layers: 117
+  out_features: 2
+  hidden_features: 256
+  in_coord_features: 2
+  in_latent_features: 256
+
+Latent:
+  input_keys: ["latent_x"]
+  output_keys: ["latent_z"]
+  N_samples: 2880
+  lumped: True
+  N_features: 256
+  dims: 2
+
+INFER:
+  Latent:
+    INFER:
+      pretrained_model_path: null
+      export_path: ./inference/latent_case3
+      pdmodel_path: ${INFER.Latent.INFER.export_path}.pdmodel
+      pdiparams_path: ${INFER.Latent.INFER.export_path}.pdiparams
+      onnx_path: ${INFER.Latent.INFER.export_path}.onnx
+      device: gpu
+      engine: native
+      precision: fp32
+      ir_optim: true
+      min_subgraph_size: 5
+      gpu_mem: 2000
+      gpu_id: 0
+      max_batch_size: 1024
+      num_cpu_threads: 10
+      log_freq: 20
+  Confild:
+    INFER:
+      pretrained_model_path: null
+      export_path: ./inference/confild_case3
+      pdmodel_path: ${INFER.Confild.INFER.export_path}.pdmodel
+      pdiparams_path: ${INFER.Confild.INFER.export_path}.pdiparams
+      onnx_path: ${INFER.Confild.INFER.export_path}.onnx
+      device: gpu
+      engine: native
+      precision: fp32
+      ir_optim: true
+      min_subgraph_size: 5
+      gpu_mem: 2000
+      gpu_id: 0
+      max_batch_size: 1024
+      num_cpu_threads: 10
+      coord_shape: [10884, 2]
+      latents_shape: [1, 256]
+      log_freq: 20
+      batch_size: 100
+
+Data:
+  data_path: /home/aistudio/work/extracted/data/Case3/data.npy
+  coor_path: /home/aistudio/work/extracted/data/Case3/coords.npy
+  normalizer:
+    method: "-11"
+    dim: 0
+  load_data_fn: load_periodic_hill_flow

diff --git a/examples/confild/conf/confild_case4.yaml b/examples/confild/conf/confild_case4.yaml

new file mode 100644

index 000000000..42dd6470d

--- /dev/null

+++ b/examples/confild/conf/confild_case4.yaml

@@ -0,0 +1,104 @@

+defaults:
+  - ppsci_default
+  - TRAIN: train_default
+  - TRAIN/ema: ema_default
+  - TRAIN/swa: swa_default
+  - EVAL: eval_default
+  - INFER: infer_default
+  - hydra/job/config/override_dirname/exclude_keys: exclude_keys_default
+  - _self_
+
+hydra:
+  run:
+    dir: ./outputs_confild_case4
+  job:
+    name: ${mode}
+    chdir: false
+  callbacks:
+    init_callback:
+      _target_: ppsci.utils.callbacks.InitCallback
+  sweep:
+    dir: ${hydra.run.dir}
+    subdir: ./
+
+mode: infer
+seed: 2025
+output_dir: ${hydra:run.dir}
+log_freq: 20
+
+TRAIN:
+  batch_size: 4
+  test_batch_size: 4
+  epochs: 20000
+  mutil_GPU: 2
+  lr:
+    cnf: 1.e-4
+    latents: 1.e-5
+
+EVAL:
+  confild_pretrained_model_path: ./outputs_confild_case4/confild_case4/epoch_99999
+  latent_pretrained_model_path: ./outputs_confild_case4/latent_case4/epoch_99999
+
+CONFILD:
+  input_keys: ["confild_x", "latent_z"]
+  output_keys: ["confild_output"]
+  num_hidden_layers: 15
+  out_features: 3
+  hidden_features: 384
+  in_coord_features: 3
+  in_latent_features: 384
+
+Latent:
+  input_keys: ["latent_x"]
+  output_keys: ["latent_z"]
+  N_samples: 1200
+  lumped: True
+  N_features: 384
+  dims: 3
+
+INFER:
+  Latent:
+    INFER:
+      pretrained_model_path: null
+      export_path: ./inference/latent_case4
+      pdmodel_path: ${INFER.Latent.INFER.export_path}.pdmodel
+      pdiparams_path: ${INFER.Latent.INFER.export_path}.pdiparams
+      onnx_path: ${INFER.Latent.INFER.export_path}.onnx
+      device: gpu
+      engine: native
+      precision: fp32
+      ir_optim: true
+      min_subgraph_size: 5
+      gpu_mem: 2000
+      gpu_id: 0
+      max_batch_size: 1024
+      num_cpu_threads: 10
+      log_freq: 20
+  Confild:
+    INFER:
+      pretrained_model_path: null
+      export_path: ./inference/confild_case4
+      pdmodel_path: ${INFER.Confild.INFER.export_path}.pdmodel
+      pdiparams_path: ${INFER.Confild.INFER.export_path}.pdiparams
+      onnx_path: ${INFER.Confild.INFER.export_path}.onnx
+      device: gpu
+      engine: native
+      precision: fp32
+      ir_optim: true
+      min_subgraph_size: 5
+      gpu_mem: 2000
+      gpu_id: 0
+      max_batch_size: 1024
+      num_cpu_threads: 10
+      coord_shape: [58483, 3]
+      latents_shape: [1, 384]
+      log_freq: 20
+      batch_size: 4
+
+Data:
+  data_path: /home/aistudio/work/extracted/data/Case4/data.npy
+  coor_path: /home/aistudio/work/extracted/data/Case4/coords.npy
+  normalizer:
+    method: "-11"
+    dim: 0
+  load_data_fn: load_3d_flow

diff --git a/examples/confild/conf/un_confild_case1.yaml b/examples/confild/conf/un_confild_case1.yaml

new file mode 100644

index 000000000..027eef920

--- /dev/null

+++ b/examples/confild/conf/un_confild_case1.yaml

@@ -0,0 +1,89 @@

+defaults:
+  - ppsci_default
+  - TRAIN: train_default
+  - TRAIN/ema: ema_default
+  - TRAIN/swa: swa_default
+  - EVAL: eval_default
+  - INFER: infer_default
+  - hydra/job/config/override_dirname/exclude_keys: exclude_keys_default
+  - _self_
+
+hydra:
+  run:
+    dir: outputs_confild_case1/${now:%Y-%m-%d}/${now:%H-%M-%S}/${hydra.job.override_dirname}
+  job:
+    name: ${mode}
+    chdir: false
+  callbacks:
+    init_callback:
+      _target_: ppsci.utils.callbacks.InitCallback
+  sweep:
+    dir: ${hydra.run.dir}
+    subdir: ./
+
+mode: eval
+seed: 2025
+output_dir: ${hydra:run.dir}
+log_freq: 20
+save_path: ${output_dir}/result.npy
+
+TRAIN:
+  batch_size: 16
+  test_batch_size: 16
+  ema_rate: "0.9999"
+  lr_anneal_steps: 10000
+  lr: 5.e-5
+  weight_decay: 0.0
+  final_lr: 1.e-5
+  microbatch: -1
+  max_steps: 10000
+
+EVAL:
+  mutil_GPU: 1
+  lr: 5.e-5
+  ema_rate: "0.9999"
+  log_interval: 1000
+  save_interval: 10000
+  lr_anneal_steps: 0
+  time_length: 128
+  latent_length: 128
+  test_batch_size: 16
+
+UNET:
+  image_size: 128
+  num_channels: 128
+  num_res_blocks: 2
+  num_heads: 4
+  num_head_channels: 64
+  attention_resolutions: "32,16,8"
+  channel_mult: null
+  ema_path: /home/aistudio/work/data/Case1/diffusion/ema.pdparams
+
+Diff:
+  steps: 1000
+  noise_schedule: "cosine"
+
+CNF:
+  mutil_GPU: 1
+  data_path: /home/aistudio/work/data/Case1/data.npy
+  coor_path: /home/aistudio/work/data/Case1/coords.npy
+  load_data_fn: load_elbow_flow
+  normalizer:
+    method: "-11"
+    dim: 0
+  CONFILD:
+    input_keys: ["confild_x", "latent_z"]
+    output_keys: ["confild_output"]
+    num_hidden_layers: 10
+    out_features: 3
+    hidden_features: 128
+    in_coord_features: 2
+    in_latent_features: 128
+  normalizer_params_path: /home/aistudio/work/data/Case1/cnf/normalizer_params.pdparams
+  model_path: /home/aistudio/work/PaddleScience/examples/confild/cnf_model.pdparams
+
+DATA:
+  max_val: 1.0
+  min_val: -1.0
+  train_data: "/home/aistudio/work/data/Case1/train_data.npy"
+  valid_data: "/home/aistudio/work/data/Case1/valid_data.npy"

diff --git a/examples/confild/conf/un_confild_case2.yaml b/examples/confild/conf/un_confild_case2.yaml

new file mode 100644

index 000000000..4356abaf1

--- /dev/null

+++ b/examples/confild/conf/un_confild_case2.yaml

@@ -0,0 +1,88 @@

+defaults:
+  - ppsci_default
+  - TRAIN: train_default
+  - TRAIN/ema: ema_default
+  - TRAIN/swa: swa_default
+  - EVAL: eval_default
+  - INFER: infer_default
+  - hydra/job/config/override_dirname/exclude_keys: exclude_keys_default
+  - _self_
+
+hydra:
+  run:
+    dir: ./outputs_un_confild_case2
+  job:
+    name: ${mode}
+    chdir: false
+  callbacks:
+    init_callback:
+      _target_: ppsci.utils.callbacks.InitCallback
+  sweep:
+    dir: ${hydra.run.dir}
+    subdir: ./
+
+mode: eval
+seed: 2025
+output_dir: ${hydra:run.dir}
+log_freq: 20
+save_path: ${output_dir}/result.npy
+
+TRAIN:
+  batch_size: 16
+  test_batch_size: 16
+  ema_rate: "0.9999"
+  lr_anneal_steps: 10000
+  lr: 5.e-5
+  weight_decay: 0.0
+  final_lr: 1.e-5
+  microbatch: -1
+  max_steps: 10000
+
+EVAL:
+  mutil_GPU: 1
+  lr: 5.e-5
+  ema_rate: "0.9999"
+  log_interval: 1000
+  save_interval: 10000
+  lr_anneal_steps: 0
+  time_length: 256
+  latent_length: 256
+  test_batch_size: 16
+
+UNET:
+  image_size: 256
+  num_channels: 128
+  num_res_blocks: 2
+  num_heads: 4
+  num_head_channels: 64
+  attention_resolutions: "32,16,8"
+  channel_mult: null
+  ema_path: /home/aistudio/work/data/Case2/diffusion/ema.pdparams
+
+Diff:
+  steps: 1000
+  noise_schedule: "cosine"
+
+CNF:
+  mutil_GPU: 1
+  data_path: /home/aistudio/work/data/Case2/data.npy
+  load_data_fn: load_channel_flow
+  normalizer:
+    method: "-11"
+    dim: 0
+  CONFILD:
+    input_keys: ["confild_x", "latent_z"]
+    output_keys: ["confild_output"]
+    num_hidden_layers: 10
+    out_features: 4
+    hidden_features: 256
+    in_coord_features: 2
+    in_latent_features: 256
+  normalizer_params_path: /home/aistudio/work/data/Case2/cnf/normalizer_params.pdparams
+  model_path: /home/aistudio/work/PaddleScience/examples/confild/cnf_model.pdparams
+
+DATA:
+  max_val: 1.0
+  min_val: -1.0
+  train_data: "/home/aistudio/work/data/Case2/train_data.npy"
+  valid_data: "/home/aistudio/work/data/Case2/valid_data.npy"

\ No newline at end of file

diff --git a/examples/confild/conf/un_confild_case3.yaml b/examples/confild/conf/un_confild_case3.yaml

new file mode 100644

index 000000000..75628cdae

--- /dev/null

+++ b/examples/confild/conf/un_confild_case3.yaml

@@ -0,0 +1,89 @@

+defaults:
+  - ppsci_default
+  - TRAIN: train_default
+  - TRAIN/ema: ema_default
+  - TRAIN/swa: swa_default
+  - EVAL: eval_default
+  - INFER: infer_default
+  - hydra/job/config/override_dirname/exclude_keys: exclude_keys_default
+  - _self_
+
+hydra:
+  run:
+    dir: ./outputs_un_confild_case3
+  job:
+    name: ${mode}
+    chdir: false
+  callbacks:
+    init_callback:
+      _target_: ppsci.utils.callbacks.InitCallback
+  sweep:
+    dir: ${hydra.run.dir}
+    subdir: ./
+
+mode: eval
+seed: 2025
+output_dir: ${hydra:run.dir}
+log_freq: 20
+save_path: ${output_dir}/result.npy
+
+TRAIN:
+  batch_size: 16
+  test_batch_size: 16
+  ema_rate: "0.9999"
+  lr_anneal_steps: 10000
+  lr: 5.e-5
+  weight_decay: 0.0
+  final_lr: 1.e-5
+  microbatch: -1
+  max_steps: 10000
+
+EVAL:
+  mutil_GPU: 2
+  lr: 5.e-5
+  ema_rate: "0.9999"
+  log_interval: 1000
+  save_interval: 10000
+  lr_anneal_steps: 0
+  time_length: 256
+  latent_length: 256
+  test_batch_size: 16
+
+UNET:
+  image_size: 256
+  num_channels: 128
+  num_res_blocks: 2
+  num_heads: 4
+  num_head_channels: 64
+  attention_resolutions: "32,16,8"
+  channel_mult: null
+  ema_path: /home/aistudio/work/data/Case3/diffusion/ema.pdparams
+
+Diff:
+  steps: 1000
+  noise_schedule: "cosine"
+
+CNF:
+  mutil_GPU: 1
+  data_path: /home/aistudio/work/data/Case3/data.npy
+  coor_path: /home/aistudio/work/data/Case3/coords.npy
+  normalizer:
+    method: "-11"
+    dim: 0
+  load_data_fn: load_periodic_hill_flow
+  CONFILD:
+    input_keys: ["confild_x", "latent_z"]
+    output_keys: ["confild_output"]
+    num_hidden_layers: 117
+    out_features: 2
+    hidden_features: 256
+    in_coord_features: 2
+    in_latent_features: 256
+  normalizer_params_path: /home/aistudio/work/data/Case3/cnf/normalizer_params.pdparams
+  model_path: /home/aistudio/work/PaddleScience/examples/confild/cnf_model.pdparams
+
+DATA:
+  min_val: -1.0
+  max_val: 1.0
+  train_data: "/home/aistudio/work/data/Case3/train_data.npy"
+  valid_data: "/home/aistudio/work/data/Case3/valid_data.npy"

diff --git a/examples/confild/conf/un_confild_case4.yaml b/examples/confild/conf/un_confild_case4.yaml

new file mode 100644

index 000000000..f3113f37c

--- /dev/null

+++ b/examples/confild/conf/un_confild_case4.yaml

@@ -0,0 +1,90 @@

+defaults:
+  - ppsci_default
+  - TRAIN: train_default
+  - TRAIN/ema: ema_default
+  - TRAIN/swa: swa_default
+  - EVAL: eval_default
+  - INFER: infer_default
+  - hydra/job/config/override_dirname/exclude_keys: exclude_keys_default
+  - _self_
+
+hydra:
+  run:
+    dir: ./outputs_un_confild_case4
+  job:
+    name: ${mode}
+    chdir: false
+  callbacks:
+    init_callback:
+      _target_: ppsci.utils.callbacks.InitCallback
+  sweep:
+    dir: ${hydra.run.dir}
+    subdir: ./
+
+mode: eval
+seed: 2025
+output_dir: ${hydra:run.dir}
+log_freq: 20
+save_path: ${output_dir}/result.npy
+
+TRAIN:
+  batch_size: 8
+  test_batch_size: 8
+  ema_rate: "0.9999"
+  lr_anneal_steps: 10000
+  lr: 5.e-5
+  weight_decay: 0.0
+  final_lr: 1.e-5
+  microbatch: -1
+  max_steps: 10000
+
+EVAL:
+  mutil_GPU: 2
+  lr: 5.e-5
+  ema_rate: "0.9999"
+  log_interval: 1000
+  save_interval: 10000
+  lr_anneal_steps: 0
+  time_length: 256
+  latent_length: 256
+  test_batch_size: 8
+
+UNET:
+
+  image_size: 384
+  num_channels: 128
+  num_res_blocks: 2
+  num_heads: 4
+  num_head_channels: 64
+  attention_resolutions: "32,16,8"
+  channel_mult: "1, 1, 2, 2, 4, 4"
+  ema_path: /home/aistudio/work/data/Case4/diffusion/ema.pdparams
+
+Diff:
+  steps: 1000
+  noise_schedule: "cosine"
+
+CNF:
+  mutil_GPU: 1
+  data_path: /home/aistudio/work/data/Case4/data.npy
+  coor_path: /home/aistudio/work/data/Case4/coords.npy
+  normalizer:
+    method: "-11"
+    dim: 0
+  load_data_fn: load_3d_flow
+  CONFILD:
+    input_keys: ["confild_x", "latent_z"]
+    output_keys: ["confild_output"]
+    num_hidden_layers: 15
+    out_features: 3
+    hidden_features: 384
+    in_coord_features: 3
+    in_latent_features: 384
+  normalizer_params_path: /home/aistudio/work/data/Case4/cnf/normalizer_params.pdparams
+  model_path: /home/aistudio/work/PaddleScience/examples/confild/cnf_model.pdparams
+
+DATA:
+  min_val: -1.0
+  max_val: 1.0
+  train_data: "/home/aistudio/work/data/Case4/train_data.npy"
+  valid_data: "/home/aistudio/work/data/Case4/valid_data.npy"

diff --git a/examples/confild/confild.py b/examples/confild/confild.py

new file mode 100644

index 000000000..bb8896336

--- /dev/null

+++ b/examples/confild/confild.py

@@ -0,0 +1,631 @@

+# Copyright (c) 2025 PaddlePaddle Authors. All Rights Reserved.

+

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+

+# http://www.apache.org/licenses/LICENSE-2.0

+

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import hydra

+import matplotlib.pyplot as plt

+import numpy as np

+import paddle

+from omegaconf import DictConfig

+from paddle.distributed import fleet

+from paddle.io import DataLoader, DistributedBatchSampler

+

+import ppsci

+from ppsci.arch import LatentContainer, SIRENAutodecoder_film

+from ppsci.utils import logger

+

+

+def load_elbow_flow(path):

+ return np.load(f"{path}")[1:]

+

+

+def load_channel_flow(

+ path,

+ t_start=0,

+ t_end=1200,

+ t_every=1,

+):

+ return np.load(f"{path}")[t_start:t_end:t_every]

+

+

+def load_periodic_hill_flow(path):

+ data = np.load(f"{path}")

+ return data

+

+

+def load_3d_flow(path):

+ data = np.load(f"{path}")

+ return data

+

+

+def rMAE(prediction, target, dims=(1, 2)):

+ return paddle.abs(x=prediction - target).mean(axis=dims) / paddle.abs(

+ x=target

+ ).mean(axis=dims)

+

+

+class Normalizer_ts(object):

+ def __init__(self, params=[], method="-11", dim=None):

+ self.params = params

+ self.method = method

+ self.dim = dim

+

+ def fit_normalize(self, data):

+ assert type(data) == paddle.Tensor

+ if len(self.params) == 0:

+ if self.method == "-11" or self.method == "01":

+ if self.dim is None:

+ self.params = paddle.max(x=data), paddle.min(x=data)

+ else:

+ self.params = (

+ paddle.max(keepdim=True, x=data, axis=self.dim),

+ paddle.argmax(keepdim=True, x=data, axis=self.dim),

+ )[0], (

+ paddle.min(keepdim=True, x=data, axis=self.dim),

+ paddle.argmin(keepdim=True, x=data, axis=self.dim),

+ )[

+ 0

+ ]

+ elif self.method == "ms":

+ if self.dim is None:

+ self.params = paddle.mean(x=data, axis=self.dim), paddle.std(

+ x=data, axis=self.dim

+ )

+ else:

+ self.params = paddle.mean(

+ x=data, axis=self.dim, keepdim=True

+ ), paddle.std(x=data, axis=self.dim, keepdim=True)

+ elif self.method == "none":

+ self.params = None

+ return self.fnormalize(data, self.params, self.method)

+

+ def normalize(self, new_data):

+ if not new_data.place == self.params[0].place:

+ self.params = self.params[0], self.params[1]

+ return self.fnormalize(new_data, self.params, self.method)

+

+ def denormalize(self, new_data_norm):

+ if not new_data_norm.place == self.params[0].place:

+ self.params = self.params[0], self.params[1]

+ return self.fdenormalize(new_data_norm, self.params, self.method)

+

+ def get_params(self):

+ if self.method == "ms":

+ print("returning mean and std")

+ elif self.method == "01":

+ print("returning max and min")

+ elif self.method == "-11":

+ print("returning max and min")

+ elif self.method == "none":

+ print("do nothing")

+ return self.params

+

+ @staticmethod

+ def fnormalize(data, params, method):

+ if method == "-11":

+ return (data - params[1]) / (params[0] - params[1]) * 2 - 1

+ elif method == "01":

+ return (data - params[1]) / (params[0] - params[1])

+ elif method == "ms":

+ return (data - params[0]) / params[1]

+ elif method == "none":

+ return data

+

+ @staticmethod

+ def fdenormalize(data_norm, params, method):

+ if method == "-11":

+ return (data_norm + 1) / 2 * (params[0] - params[1]) + params[1]

+ elif method == "01":

+ return data_norm * (params[0] - params[1]) + params[1]

+ elif method == "ms":

+ return data_norm * params[1] + params[0]

+ elif method == "none":

+ return data_norm

+

+

+class BasicSet(paddle.io.Dataset):

+ def __init__(self, fois, coord, global_indices=None, extra_siren_in=None) -> None:

+ super().__init__()

+ self.fois = fois.numpy()

+ self.total_samples = tuple(fois.shape)[0]

+ self.coords = coord.numpy()

+ self.global_indices = (

+ global_indices

+ if global_indices is not None

+ else np.arange(self.total_samples)

+ )

+

+ def __len__(self):

+ return self.total_samples

+

+ def __getitem__(self, idx):

+ global_idx = self.global_indices[idx]

+ if hasattr(self, "extra_in"):

+ extra_id = idx % tuple(self.fois.shape)[1]

+ idb = idx // tuple(self.fois.shape)[1]

+ return (

+ (self.coords, self.extra_in[extra_id]),

+ self.fois[idb, extra_id],

+ global_idx,

+ )

+ else:

+ return self.coords, self.fois[idx], global_idx

+

+

+def getdata(cfg):

+ if cfg.Data.load_data_fn == "load_3d_flow":

+ fois = load_3d_flow(cfg.Data.data_path)

+ elif cfg.Data.load_data_fn == "load_elbow_flow":

+ fois = load_elbow_flow(cfg.Data.data_path)

+ elif cfg.Data.load_data_fn == "load_channel_flow":

+ fois = load_channel_flow(cfg.Data.data_path)

+ elif cfg.Data.load_data_fn == "load_periodic_hill_flow":

+ fois = load_periodic_hill_flow(cfg.Data.data_path)

+ else:

+ fois = np.load(cfg.Data.data_path)

+

+ spatio_shape = fois.shape[1:-1]

+ spatio_axis = list(

+ range(fois.ndim if isinstance(fois, np.ndarray) else fois.dim())

+ )[1:-1]

+

+ ###### read data - coordinate ######

+ if cfg.Data.get("coor_path", None) is None:

+ coord = [np.linspace(0, 1, i) for i in spatio_shape]

+ coord = np.stack(np.meshgrid(*coord, indexing="ij"), axis=-1)

+ else:

+ coord = np.load(cfg.Data.coor_path)

+ coord = coord.astype("float32")

+ fois = fois.astype("float32")

+

+ ###### convert to tensor ######

+ fois = paddle.to_tensor(fois) if not isinstance(fois, paddle.Tensor) else fois

+ coord = paddle.to_tensor(coord) if not isinstance(coord, paddle.Tensor) else coord

+ N_samples = fois.shape[0]

+

+ ###### normalizer ######

+ in_normalizer = Normalizer_ts(**cfg.Data.normalizer)

+ in_normalizer.fit_normalize(

+ coord if cfg.Latent.lumped else coord.flatten(0, cfg.Latent.dims - 1)

+ )

+ out_normalizer = Normalizer_ts(**cfg.Data.normalizer)

+ out_normalizer.fit_normalize(

+ fois if cfg.Latent.lumped else fois.flatten(0, cfg.Latent.dims)

+ )

+ normed_coords = in_normalizer.normalize(coord) # the training set is also used as the test set

+ normed_fois = out_normalizer.normalize(fois)

+

+ return normed_coords, normed_fois, N_samples, spatio_axis, out_normalizer

+

+
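When `coor_path` is not configured, `getdata` builds a uniform grid on [0, 1] for every spatial axis of `fois`, whose layout is assumed to be `(samples, *spatial, variables)`. A standalone NumPy sketch of that default branch, with a hypothetical `unit_grid` helper and made-up array sizes:

```python
import numpy as np


def unit_grid(spatio_shape):
    # One linspace on [0, 1] per spatial axis, as in getdata's default branch.
    axes = [np.linspace(0, 1, n) for n in spatio_shape]
    # indexing="ij" keeps the axis order of fois (no x/y swap).
    return np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1).astype("float32")


# Hypothetical field: 5 snapshots on a 3 x 4 grid with 2 variables.
fois = np.zeros((5, 3, 4, 2), dtype="float32")
spatio_shape = fois.shape[1:-1]              # (3, 4)
spatio_axis = list(range(fois.ndim))[1:-1]   # [1, 2], later passed to rMAE as dims
coord = unit_grid(spatio_shape)              # (3, 4, 2): one coordinate pair per grid point
```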

+def signal_train(

+ cfg,

+ normed_coords,

+ train_normed_fois,

+ test_normed_fois,

+ spatio_axis,

+ out_normalizer,

+ train_indices,

+ test_indices,

+):

+ cnf_model = SIRENAutodecoder_film(**cfg.CONFILD)

+ latents_model = LatentContainer(**cfg.Latent)

+

+ train_dataset = BasicSet(train_normed_fois, normed_coords, train_indices)

+ test_dataset = BasicSet(test_normed_fois, normed_coords, test_indices)

+

+ criterion = paddle.nn.MSELoss()

+

+ # set loader

+ train_loader = DataLoader(

+ dataset=train_dataset, batch_size=cfg.TRAIN.batch_size, shuffle=True

+ )

+ test_loader = DataLoader(

+ dataset=test_dataset, batch_size=cfg.TRAIN.test_batch_size, shuffle=False

+ )

+ # set optimizer

+ cnf_optimizer = ppsci.optimizer.Adam(cfg.TRAIN.lr.cnf, weight_decay=0.0)(cnf_model)

+ latents_optimizer = ppsci.optimizer.Adam(cfg.TRAIN.lr.latents, weight_decay=0.0)(

+ latents_model

+ )

+ losses = []

+

+ for epoch in range(cfg.TRAIN.epochs):

+ cnf_model.train()

+ latents_model.train()

+ train_loss = []

+ for batch_coords, batch_fois, idx in train_loader:

+ idx = {"latent_x": idx}

+ batch_latent = latents_model(idx)

+ if isinstance(batch_coords, list):

+ batch_coords = [coord for coord in batch_coords]

+ data = {

+ "confild_x": batch_coords,

+ "latent_z": batch_latent["latent_z"],

+ }

+ batch_output = cnf_model(data)

+ loss = criterion(batch_output["confild_output"], batch_fois)

+

+ cnf_optimizer.clear_grad(set_to_zero=False)

+ latents_optimizer.clear_grad(set_to_zero=False)

+

+ loss.backward()

+

+ cnf_optimizer.step()

+ latents_optimizer.step()

+ train_loss.append(loss.detach())

+ epoch_loss = paddle.stack(x=train_loss).mean().item()

+ losses.append(epoch_loss)

+

+ print("epoch {}, train loss {}".format(epoch + 1, epoch_loss))

+ if epoch % 100 == 0:

+ test_error = []

+ cnf_model.eval()

+ latents_model.eval()

+ with paddle.no_grad():

+ for test_coords, test_fois, idx in test_loader:

+ if isinstance(test_coords, list):

+ test_coords = [coord for coord in test_coords]

+ prediction = out_normalizer.denormalize(

+ cnf_model(

+ {

+ "confild_x": test_coords,

+ "latent_z": latents_model({"latent_x": idx})[

+ "latent_z"

+ ],

+ }

+ )["confild_output"]

+ )

+ target = out_normalizer.denormalize(test_fois)

+ error = rMAE(prediction=prediction, target=target, dims=spatio_axis)

+ test_error.append(error)

+ test_error = paddle.concat(x=test_error).mean(axis=0)

+ print("test MAE: ", test_error)

+ import os

+

+ if not os.path.exists(cfg.output_dir):

+ os.makedirs(cfg.output_dir, exist_ok=True)

+

+ paddle.save(

+ cnf_model.state_dict(), f"{cfg.output_dir}/cnf_model_{epoch}.pdparams"

+ )

+ paddle.save(

+ latents_model.state_dict(),

+ f"{cfg.output_dir}/latents_model_{epoch}.pdparams",

+ )

+

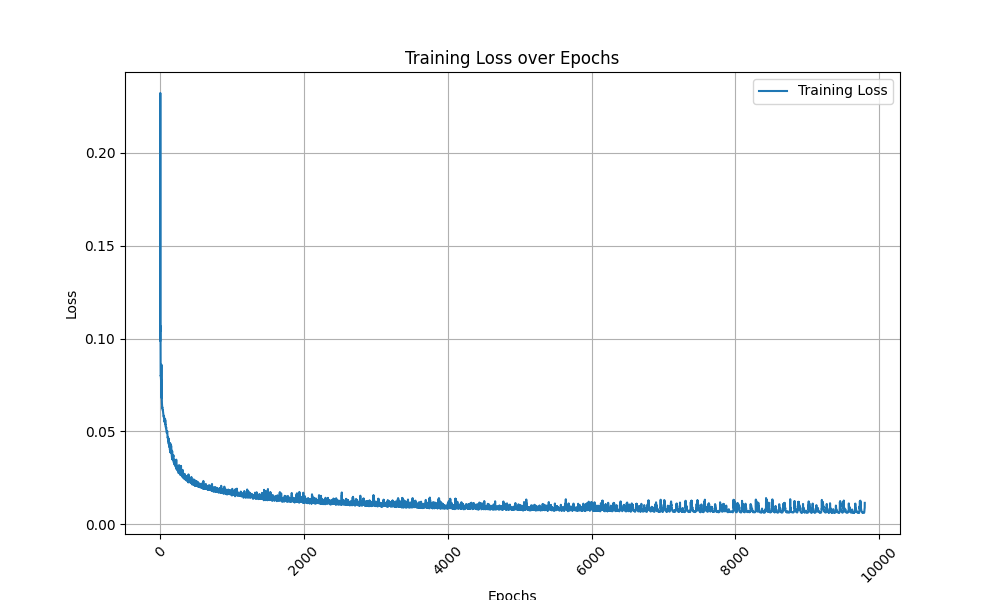

+ plt.figure(figsize=(10, 6))

+ plt.plot(range(cfg.TRAIN.epochs), losses, label="Training Loss")

+

+ plt.title("Training Loss over Epochs")

+ plt.xlabel("Epochs")

+ plt.xticks(rotation=45)

+ plt.ylabel("Loss")

+

+ plt.legend()

+

+ plt.grid(True)

+

+ plt.savefig("case.png")

+

+
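Training here follows an auto-decoder scheme: there is no encoder, each sample index owns a latent vector in `LatentContainer`, and the latents and the shared decoder are both updated from one reconstruction loss via two optimizers. The toy sketch below shows only that joint optimization; it swaps the SIREN decoder for a linear map and Adam for plain gradient descent, so it illustrates the idea rather than reproducing `signal_train`.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, latent_dim, n_points = 16, 4, 32

# Hypothetical targets generated from low-dimensional codes.
targets = rng.normal(size=(n_samples, latent_dim)) @ rng.normal(size=(latent_dim, n_points))

# Trainable parameters: one latent code per sample (the auto-decoder part)
# and a decoder shared by all samples.
latents = 0.01 * rng.normal(size=(n_samples, latent_dim))
decoder = 0.01 * rng.normal(size=(latent_dim, n_points))


def mse(z, w):
    return float(np.mean((z @ w - targets) ** 2))


lr = 0.5
initial_loss = mse(latents, decoder)
for _ in range(1500):
    residual = latents @ decoder - targets      # (n_samples, n_points)
    scale = 2.0 / residual.size                  # derivative of the mean
    grad_latents = scale * residual @ decoder.T  # d loss / d latents
    grad_decoder = scale * latents.T @ residual  # d loss / d decoder
    latents -= lr * grad_latents                 # role of latents_optimizer.step()
    decoder -= lr * grad_decoder                 # role of cnf_optimizer.step()
final_loss = mse(latents, decoder)
```

At inference time an auto-decoder looks up (or re-optimizes) a sample's latent and only runs the decoder, which is what `evaluate` does with `latent({"latent_x": idx})`.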

+def mutil_train(

+ cfg,

+ normed_coords,

+ train_normed_fois,

+ test_normed_fois,

+ spatio_axis,

+ out_normalizer,

+ train_indices,

+ test_indices,

+):

+ fleet.init(is_collective=True)

+ cnf_model = SIRENAutodecoder_film(**cfg.CONFILD)

+ cnf_model = fleet.distributed_model(cnf_model)

+ latents_model = LatentContainer(**cfg.Latent)

+ latents_model = fleet.distributed_model(latents_model)

+

+ # set optimizer

+ cnf_optimizer = ppsci.optimizer.Adam(cfg.TRAIN.lr.cnf, weight_decay=0.0)(cnf_model)

+ cnf_optimizer = fleet.distributed_optimizer(cnf_optimizer)

+ latents_optimizer = ppsci.optimizer.Adam(cfg.TRAIN.lr.latents, weight_decay=0.0)(

+ latents_model

+ )

+ latents_optimizer = fleet.distributed_optimizer(latents_optimizer)

+

+ train_dataset = BasicSet(train_normed_fois, normed_coords, train_indices)

+ test_dataset = BasicSet(test_normed_fois, normed_coords, test_indices)

+

+ train_sampler = DistributedBatchSampler(

+ train_dataset, cfg.TRAIN.batch_size, shuffle=True, drop_last=True

+ )

+ train_loader = DataLoader(

+ train_dataset,

+ batch_sampler=train_sampler,

+ num_workers=cfg.TRAIN.mutil_GPU,

+ use_shared_memory=False,

+ )

+ test_sampler = DistributedBatchSampler(

+ test_dataset, cfg.TRAIN.test_batch_size, drop_last=True

+ )

+ test_loader = DataLoader(

+ test_dataset,

+ batch_sampler=test_sampler,

+ num_workers=cfg.TRAIN.mutil_GPU,

+ use_shared_memory=False,

+ )

+

+ criterion = paddle.nn.MSELoss()

+ losses = []

+

+ for epoch in range(cfg.TRAIN.epochs):

+ cnf_model.train()

+ latents_model.train()

+ train_loss = []

+ for batch_coords, batch_fois, idx in train_loader:

+ idx = {"latent_x": idx}

+ batch_latent = latents_model(idx)

+ if isinstance(batch_coords, list):

+ batch_coords = [coord for coord in batch_coords]

+ data = {

+ "confild_x": batch_coords,

+ "latent_z": batch_latent["latent_z"],

+ }

+ batch_output = cnf_model(data)

+ loss = criterion(batch_output["confild_output"], batch_fois)

+

+ cnf_optimizer.clear_grad(set_to_zero=False)

+ latents_optimizer.clear_grad(set_to_zero=False)

+

+ loss.backward()

+

+ cnf_optimizer.step()

+ latents_optimizer.step()

+ train_loss.append(loss.detach())

+ epoch_loss = paddle.stack(x=train_loss).mean().item()

+ losses.append(epoch_loss)

+ print("epoch {}, train loss {}".format(epoch + 1, epoch_loss))

+ if epoch % 100 == 0:

+ test_error = []

+ cnf_model.eval()

+ latents_model.eval()

+ with paddle.no_grad():

+ for test_coords, test_fois, idx in test_loader:

+ if isinstance(test_coords, list):

+ test_coords = [coord for coord in test_coords]

+ prediction = out_normalizer.denormalize(

+ cnf_model(

+ {

+ "confild_x": test_coords,

+ "latent_z": latents_model({"latent_x": idx})[

+ "latent_z"

+ ],

+ }

+ )["confild_output"]

+ )

+ target = out_normalizer.denormalize(test_fois)

+ error = rMAE(prediction=prediction, target=target, dims=spatio_axis)

+ test_error.append(error)

+ test_error = paddle.concat(x=test_error).mean(axis=0)

+ print("test MAE: ", test_error)

+

+ import os

+

+ if not os.path.exists(cfg.output_dir):

+ os.makedirs(cfg.output_dir, exist_ok=True)

+

+ paddle.save(

+ cnf_model.state_dict(), f"{cfg.output_dir}/cnf_model_{epoch}.pdparams"

+ )

+ paddle.save(

+ latents_model.state_dict(),

+ f"{cfg.output_dir}/latents_model_{epoch}.pdparams",

+ )

+

+ plt.figure(figsize=(10, 6))

+ plt.plot(range(cfg.TRAIN.epochs), losses, label="Training Loss")

+

+ plt.title("Training Loss over Epochs")

+ plt.xlabel("Epochs")

+ plt.xticks(rotation=45)

+ plt.ylabel("Loss")

+

+ plt.legend()

+

+ plt.grid(True)

+

+ plt.savefig("case.png")

+

+

+def train(cfg):

+ world_size = cfg.TRAIN.mutil_GPU

+ normed_coords, normed_fois, N_samples, spatio_axis, out_normalizer = getdata(cfg)

+ train_normed_fois = normed_fois

+ test_normed_fois = normed_fois

+ train_indices = list(range(N_samples))

+ test_indices = list(range(N_samples))

+

+ if world_size > 1:

+ import paddle.distributed as dist

+

+ dist.init_parallel_env()

+ mutil_train(

+ cfg,

+ normed_coords,

+ train_normed_fois,

+ test_normed_fois,

+ spatio_axis,

+ out_normalizer,

+ train_indices,

+ test_indices,

+ )

+ else:

+ signal_train(

+ cfg,

+ normed_coords,

+ train_normed_fois,

+ test_normed_fois,

+ spatio_axis,

+ out_normalizer,

+ train_indices,

+ test_indices,

+ )

+

+

+def evaluate(cfg: DictConfig):

+ # set data

+ normed_coords, normed_fois, _, spatio_axis, out_normalizer = getdata(cfg)

+ # e.g. normed_coords: [918, 2], normed_fois: [16000, 918, 3]

+ print(normed_coords.shape)

+ print(normed_fois.shape)

+ # index of the first snapshot used for evaluation (configurable via cfg.t_std, default 15698)

+ t_std = cfg.get("t_std", 15698)

+ normed_fois = normed_fois[t_std:]

+ if len(normed_coords.shape) + 1 == len(normed_fois.shape):

+ normed_coords = paddle.tile(

+ normed_coords, [normed_fois.shape[0]] + [1] * len(normed_coords.shape)

+ )

+

+ idx = paddle.to_tensor(np.arange(normed_fois.shape[0]), dtype="int64")

+ # set model

+ confild = SIRENAutodecoder_film(**cfg.CONFILD)

+ latent = LatentContainer(**cfg.Latent)

+ logger.info(

+ "Loading pretrained model from {}".format(

+ cfg.EVAL.confild_pretrained_model_path

+ )

+ )

+ ppsci.utils.save_load.load_pretrain(

+ confild,

+ cfg.EVAL.confild_pretrained_model_path,

+ )

+ logger.info(

+ "Loading pretrained model from {}".format(cfg.EVAL.latent_pretrained_model_path)

+ )

+ ppsci.utils.save_load.load_pretrain(

+ latent,

+ cfg.EVAL.latent_pretrained_model_path,

+ )

+ latent_test_pred = latent({"latent_x": idx})

+ y_test_pred = []

+ for i in range(normed_coords.shape[0]):

+ output = confild(

+ {

+ "confild_x": normed_coords[i],

+ "latent_z": latent_test_pred["latent_z"][i],

+ }

+ )["confild_output"].numpy()

+ y_test_pred.append(output)

+

+ y_test_pred = paddle.to_tensor(np.array(y_test_pred))

+ y_test_pred = out_normalizer.denormalize(y_test_pred)

+ y_test = out_normalizer.denormalize(normed_fois)

+

+ def calc_err():

+ var_name = ["u", "v", "p"]

+ for i, var in enumerate(var_name):

+ u_true_mean = paddle.mean(y_test[:, :, i], axis=0)

+ u_label_mean = paddle.mean(y_test_pred[:, :, i], axis=0)

+ u_true_std = paddle.std(y_test[:, :, i], axis=0)

+ u_label_std = paddle.std(y_test_pred[:, :, i], axis=0)

+ avg_discrepancy = paddle.mean(paddle.abs(u_true_mean - u_label_mean))

+ std_discrepancy = paddle.mean(paddle.abs(u_true_std - u_label_std))

+ print(f"Mean discrepancy [{var}]: {avg_discrepancy.item():.3f}")

+ print(f"Std discrepancy [{var}]: {std_discrepancy.item():.4f}")

+

+ calc_err()

+

+
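`calc_err` compares first and second temporal moments: for each variable it takes the per-point mean and standard deviation over snapshots, then averages the absolute gap between truth and prediction. A NumPy sketch of the same metric, assuming arrays shaped `(n_snapshots, n_points, n_vars)` and a hypothetical `moment_discrepancies` name:

```python
import numpy as np


def moment_discrepancies(y_true, y_pred, var_names=("u", "v", "p")):
    # y_true, y_pred: arrays shaped (n_snapshots, n_points, n_vars).
    results = {}
    for i, name in enumerate(var_names):
        true_mean = y_true[:, :, i].mean(axis=0)  # per-point temporal mean
        pred_mean = y_pred[:, :, i].mean(axis=0)
        # ddof=1 mirrors paddle.std's default unbiased estimate.
        true_std = y_true[:, :, i].std(axis=0, ddof=1)
        pred_std = y_pred[:, :, i].std(axis=0, ddof=1)
        results[name] = (
            float(np.mean(np.abs(true_mean - pred_mean))),
            float(np.mean(np.abs(true_std - pred_std))),
        )
    return results


rng = np.random.default_rng(0)
y_true = rng.normal(size=(20, 5, 3))
y_pred = y_true + 0.5  # hypothetical prediction with a constant bias
errors = moment_discrepancies(y_true, y_pred)
```

A constant bias shows up only in the mean term, which is why the two numbers are reported separately.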

+def inference(cfg):

+ normed_coords, normed_fois, _, _, _ = getdata(cfg)

+ if len(normed_coords.shape) + 1 == len(normed_fois.shape):

+ normed_coords = paddle.tile(

+ normed_coords, [normed_fois.shape[0]] + [1] * len(normed_coords.shape)

+ )

+

+ fois_len = normed_fois.shape[0]

+

+ idxs = np.arange(fois_len)

+ from deploy import python_infer

+

+ latent_predictor = python_infer.GeneralPredictor(cfg.INFER.Latent)

+ input_dict = {"latent_x": idxs}

+ output_dict = latent_predictor.predict(input_dict, cfg.INFER.batch_size)

+

+ cnf_predictor = python_infer.GeneralPredictor(cfg.INFER.Confild)

+ input_dict = {

+ "confild_x": normed_coords.numpy(),

+ "latent_z": list(output_dict.values())[0],

+ }

+ output_dict = cnf_predictor.predict(input_dict, cfg.INFER.batch_size)

+

+ logger.info("Result is {}".format(output_dict["fetch_name_0"]))

+

+

+def export(cfg):

+ # set model

+ cnf_model = SIRENAutodecoder_film(**cfg.CONFILD)

+ latent_model = LatentContainer(**cfg.Latent)

+ # initialize solver

+ latnet_solver = ppsci.solver.Solver(

+ latent_model,

+ pretrained_model_path=cfg.INFER.Latent.INFER.pretrained_model_path,

+ )

+ cnf_solver = ppsci.solver.Solver(

+ cnf_model,

+ pretrained_model_path=cfg.INFER.Confild.INFER.pretrained_model_path,

+ )

+ # export model

+ from paddle.static import InputSpec

+

+ input_spec = [

+ {key: InputSpec([None], "int64", name=key) for key in latent_model.input_keys},

+ ]

+ cnf_input_spec = [

+ {

+ cnf_model.input_keys[0]: InputSpec(

+ [None] + list(cfg.INFER.Confild.INFER.coord_shape),

+ "float32",

+ name=cnf_model.input_keys[0],

+ ),

+ cnf_model.input_keys[1]: InputSpec(

+ [None] + list(cfg.INFER.Confild.INFER.latents_shape),

+ "float32",

+ name=cnf_model.input_keys[1],

+ ),

+ }

+ ]

+ cnf_solver.export(cnf_input_spec, cfg.INFER.Confild.INFER.export_path)

+ latnet_solver.export(input_spec, cfg.INFER.Latent.INFER.export_path)

+

+

+@hydra.main(version_base=None, config_path="./conf", config_name="confild_case1.yaml")

+def main(cfg: DictConfig):

+ if cfg.mode == "train":

+ train(cfg)

+ elif cfg.mode == "eval":

+ evaluate(cfg)

+ elif cfg.mode == "infer":

+ inference(cfg)

+ elif cfg.mode == "export":

+ export(cfg)

+ else:

+ raise ValueError(

+ f"cfg.mode should be in ['train', 'eval', 'infer', 'export'], but got '{cfg.mode}'"

+ )

+

+

+if __name__ == "__main__":

+ main()

diff --git a/examples/confild/resample.py b/examples/confild/resample.py

new file mode 100644

index 000000000..b6bb79202

--- /dev/null

+++ b/examples/confild/resample.py

@@ -0,0 +1,120 @@

+from abc import ABC, abstractmethod

+

+import numpy as np

+import paddle as th

+import paddle.distributed as dist

+

+

+def create_named_schedule_sampler(name, diffusion):

+ if name == "uniform":

+ return UniformSampler(diffusion)

+ elif name == "loss-second-moment":

+ return LossSecondMomentResampler(diffusion)

+ else:

+ raise NotImplementedError(f"unknown schedule sampler: {name}")

+

+

+class ScheduleSampler(ABC):

+

+ @abstractmethod

+ def weights(self):

+ """

+ Get a numpy array of weights, one per diffusion step.

+

+ The weights needn't be normalized, but must be positive.

+ """

+

+ def sample(self, batch_size):

+ w = self.weights()

+ p = w / np.sum(w)

+ indices_np = np.random.choice(len(p), size=(batch_size,), p=p)

+ indices = th.to_tensor(indices_np, dtype="int64")

+ weights_np = 1 / (len(p) * p[indices_np])

+ weights = th.to_tensor(weights_np, dtype="float32")

+ return indices, weights

+

+

+class UniformSampler(ScheduleSampler):

+ def __init__(self, diffusion):

+ self.diffusion = diffusion

+ self._weights = np.ones([diffusion.num_timesteps])

+

+ def weights(self):

+ return self._weights

+

+

+class LossAwareSampler(ScheduleSampler):

+ def update_with_local_losses(self, local_ts, local_losses):

+

+ batch_sizes = [

+ th.to_tensor([0], dtype=th.int32, place=local_ts.place)

+ for _ in range(dist.get_world_size())

+ ]

+ dist.all_gather(

+ batch_sizes,

+ th.to_tensor([len(local_ts)], dtype=th.int32, place=local_ts.place),

+ )

+

+ # Pad all_gather batches to be the maximum batch size.

+ batch_sizes = [x.item() for x in batch_sizes]

+ max_bs = max(batch_sizes)

+

+ timestep_batches = [th.zeros(max_bs).to(local_ts) for bs in batch_sizes]

+ loss_batches = [th.zeros(max_bs).to(local_losses) for bs in batch_sizes]

+ dist.all_gather(timestep_batches, local_ts)

+ dist.all_gather(loss_batches, local_losses)

+ timesteps = [

+ x.item() for y, bs in zip(timestep_batches, batch_sizes) for x in y[:bs]

+ ]

+ losses = [x.item() for y, bs in zip(loss_batches, batch_sizes) for x in y[:bs]]

+ self.update_with_all_losses(timesteps, losses)

+

+ @abstractmethod

+ def update_with_all_losses(self, ts, losses):

+ """

+ Update the reweighting using losses from a model.

+

+ Sub-classes should override this method to update the reweighting

+ using losses from the model.

+

+ This method directly updates the reweighting without synchronizing

+ between workers. It is called by update_with_local_losses from all

+ ranks with identical arguments. Thus, it should have deterministic

+ behavior to maintain state across workers.

+

+ :param ts: a list of int timesteps.

+ :param losses: a list of float losses, one per timestep.

+ """

+

+

+class LossSecondMomentResampler(LossAwareSampler):

+ def __init__(self, diffusion, history_per_term=10, uniform_prob=0.001):

+ self.diffusion = diffusion

+ self.history_per_term = history_per_term

+ self.uniform_prob = uniform_prob

+ self._loss_history = np.zeros(

+ [diffusion.num_timesteps, history_per_term], dtype=np.float64

+ )

+ self._loss_counts = np.zeros([diffusion.num_timesteps], dtype=np.int64)

+

+ def weights(self):

+ if not self._warmed_up():

+ return np.ones([self.diffusion.num_timesteps], dtype=np.float64)

+ weights = np.sqrt(np.mean(self._loss_history**2, axis=-1))

+ weights /= np.sum(weights)

+ weights *= 1 - self.uniform_prob

+ weights += self.uniform_prob / len(weights)

+ return weights

+

+ def update_with_all_losses(self, ts, losses):

+ for t, loss in zip(ts, losses):

+ if self._loss_counts[t] == self.history_per_term:

+ # Shift out the oldest loss term.

+ self._loss_history[t, :-1] = self._loss_history[t, 1:]

+ self._loss_history[t, -1] = loss

+ else:

+ self._loss_history[t, self._loss_counts[t]] = loss

+ self._loss_counts[t] += 1

+

+ def _warmed_up(self):

+ return (self._loss_counts == self.history_per_term).all()
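`LossSecondMomentResampler` samples timestep `t` with probability proportional to the RMS of its recent losses, mixed with a small uniform floor, and `ScheduleSampler.sample` returns `1 / (N * p[t])` weights so the weighted loss stays an unbiased estimate of the uniform average. A NumPy sketch of both pieces, with hypothetical helper names and toy loss values:

```python
import numpy as np


def second_moment_weights(loss_history, uniform_prob=0.001):
    # loss_history: (num_timesteps, history_per_term) of recent losses.
    w = np.sqrt(np.mean(loss_history ** 2, axis=-1))  # RMS loss per timestep
    w = w / np.sum(w)
    # Blend in a uniform floor so every timestep keeps non-zero probability.
    return w * (1 - uniform_prob) + uniform_prob / len(w)


def importance_weights(p, indices):
    # Same correction as ScheduleSampler.sample: 1 / (N * p[t]).
    return 1.0 / (len(p) * p[indices])


history = np.array([[1.0, 1.0], [3.0, 3.0], [0.0, 0.0]])
p_pure = second_moment_weights(history, uniform_prob=0.0)
p_mixed = second_moment_weights(history, uniform_prob=0.1)
per_step_loss = np.array([2.0, 5.0, 7.0])
# Expected weighted loss under p_mixed equals the plain mean over timesteps.
expected = np.sum(p_mixed * importance_weights(p_mixed, np.arange(3)) * per_step_loss)
```

The uniform floor matters: with `uniform_prob=0.0` the third timestep gets probability 0 and its importance weight would be infinite.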

diff --git a/examples/confild/un_confild.py b/examples/confild/un_confild.py

new file mode 100644

index 000000000..eb9bd9e9a

--- /dev/null

+++ b/examples/confild/un_confild.py

@@ -0,0 +1,726 @@

+# Copyright (c) 2025 PaddlePaddle Authors. All Rights Reserved.

+

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+

+# http://www.apache.org/licenses/LICENSE-2.0

+

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import copy

+import enum

+import functools

+import math

+import os

+from abc import ABC, abstractmethod

+

+import hydra

+import matplotlib.pyplot as plt

+import numpy as np

+import paddle

+from omegaconf import DictConfig

+from resample import LossAwareSampler, UniformSampler

+

+from ppsci.arch import (

+ LossType,

+ ModelMeanType,

+ ModelVarType,

+ SIRENAutodecoder_film,

+ SpacedDiffusion,

+ UNetModel,

+)

+from ppsci.utils import logger

+

+

+def mean_flat(tensor):

+ return tensor.mean(axis=list(range(1, len(tensor.shape))))

+

+

+def normal_kl(mean1, logvar1, mean2, logvar2):

+ return 0.5 * (

+ -1.0

+ + logvar2

+ - logvar1

+ + paddle.exp(logvar1 - logvar2)

+ + ((mean1 - mean2) ** 2) * paddle.exp(-logvar2)

+ )

+

+
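`normal_kl` is the closed-form KL(N(mean1, exp(logvar1)) || N(mean2, exp(logvar2))) between diagonal Gaussians parameterized by mean and log-variance, and `mean_flat` averages over every axis except the batch axis. NumPy equivalents, useful for sanity-checking against known values (the `_np` names are hypothetical):

```python
import numpy as np


def mean_flat_np(x):
    # Average over every axis except the leading batch axis.
    return x.mean(axis=tuple(range(1, x.ndim)))


def normal_kl_np(mean1, logvar1, mean2, logvar2):
    # KL( N(mean1, exp(logvar1)) || N(mean2, exp(logvar2)) ), elementwise.
    return 0.5 * (
        -1.0
        + logvar2
        - logvar1
        + np.exp(logvar1 - logvar2)
        + (mean1 - mean2) ** 2 * np.exp(-logvar2)
    )


# Per-sample KL for a batch of 2 "images", averaged over non-batch axes.
mean1 = np.zeros((2, 1, 4, 4))
mean2 = np.concatenate([np.zeros((1, 1, 4, 4)), 2.0 * np.ones((1, 1, 4, 4))])
kl = mean_flat_np(normal_kl_np(mean1, np.zeros_like(mean1), mean2, np.zeros_like(mean2)))
```

With unit variances the KL reduces to half the squared mean gap, so the second sample gives 0.5 * 2**2 = 2.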

+def _extract_into_tensor(arr, timesteps, broadcast_shape):

+ res = paddle.to_tensor(arr, dtype="float32", place=timesteps.place)[timesteps]

+ while len(res.shape) < len(broadcast_shape):

+ res = res[..., None]

+ return res.expand(broadcast_shape)

+

+
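`_extract_into_tensor` gathers one schedule coefficient per batch element from a 1-D array indexed by timestep, then appends singleton axes and broadcasts to the batch shape. The coefficients are fractions such as cumulative alphas, so they need a floating-point dtype; casting them to the integer dtype of `timesteps` would truncate them. A NumPy sketch of the gather-and-broadcast step (hypothetical name `extract_into_array`):

```python
import numpy as np


def extract_into_array(arr, timesteps, broadcast_shape):
    # Gather one coefficient per batch element, keeping float precision.
    res = np.asarray(arr, dtype=np.float32)[timesteps]
    # Append trailing singleton axes until the rank matches, then broadcast.
    while res.ndim < len(broadcast_shape):
        res = res[..., None]
    return np.broadcast_to(res, broadcast_shape)


alphas_cumprod = np.array([0.9, 0.5, 0.1])  # toy schedule values
t = np.array([2, 0])                        # one timestep per batch element
coef = extract_into_array(alphas_cumprod, t, (2, 1, 4, 4))
```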

+train_losses = []

+valid_losses = []

+

+

+def create_model(

+ image_size,

+ num_channels,

+ num_res_blocks,

+ dims=2,

+ out_channels=1,

+ channel_mult=None,

+ learn_sigma=False,

+ class_cond=False,

+ use_checkpoint=False,

+ attention_resolutions="16",

+ num_heads=1,

+ num_head_channels=-1,

+ num_heads_upsample=-1,

+ use_scale_shift_norm=False,

+ dropout=0,

+ resblock_updown=False,

+ use_fp16=False,

+ use_new_attention_order=False,

+):

+ if channel_mult is None:

+ if image_size == 512:

+ channel_mult = (0.5, 1, 1, 2, 2, 4, 4)

+ elif image_size == 256:

+ channel_mult = (1, 1, 2, 2, 4, 4)

+ elif image_size == 128:

+ channel_mult = (1, 1, 2, 3, 4)

+ elif image_size == 64:

+ channel_mult = (1, 2, 3, 4)

+ else:

+ raise ValueError(f"unsupported image size: {image_size}")

+ else:

+ if isinstance(channel_mult, str):

+ channel_mult = tuple(int(ch_mult) for ch_mult in channel_mult.split(","))

+

+ attention_ds = []

+ for res in attention_resolutions.split(","):

+ attention_ds.append(image_size // int(res))

+

+ return UNetModel(

+ image_size=image_size,

+ in_channels=out_channels,

+ model_channels=num_channels,

+ out_channels=(out_channels if not learn_sigma else 2 * out_channels),

+ num_res_blocks=num_res_blocks,

+ attention_resolutions=tuple(attention_ds),

+ dropout=dropout,

+ channel_mult=channel_mult,

+ num_classes=(1000 if class_cond else None),

+ use_checkpoint=use_checkpoint,

+ use_fp16=use_fp16,

+ num_heads=num_heads,

+ num_head_channels=num_head_channels,

+ num_heads_upsample=num_heads_upsample,

+ use_scale_shift_norm=use_scale_shift_norm,

+ resblock_updown=resblock_updown,

+ use_new_attention_order=use_new_attention_order,

+ dims=dims,

+ )

+

+

+def get_named_beta_schedule(schedule_name, num_diffusion_timesteps):

+ if schedule_name == "linear":

+ scale = 1000 / num_diffusion_timesteps

+ beta_start = scale * 0.0001

+ beta_end = scale * 0.02

+ return np.linspace(

+ beta_start, beta_end, num_diffusion_timesteps, dtype=np.float64

+ )

+ elif schedule_name == "cosine":

+ return betas_for_alpha_bar(

+ num_diffusion_timesteps,

+ lambda t: math.cos((t + 0.008) / 1.008 * math.pi / 2) ** 2,

+ )

+ else:

+ raise NotImplementedError(f"unknown beta schedule: {schedule_name}")

+

+

+def betas_for_alpha_bar(num_diffusion_timesteps, alpha_bar, max_beta=0.999):

+ betas = []

+ for i in range(num_diffusion_timesteps):

+ t1 = i / num_diffusion_timesteps

+ t2 = (i + 1) / num_diffusion_timesteps

+ betas.append(min(1 - alpha_bar(t2) / alpha_bar(t1), max_beta))

+ return np.array(betas)

+

+
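`betas_for_alpha_bar` discretizes a continuous `alpha_bar(t)` so that the cumulative product of `1 - beta` telescopes back to `alpha_bar(t) / alpha_bar(0)`, except where `max_beta` clips a step (for the cosine schedule, typically only the last one). The sketch below re-creates the helper and checks that property numerically:

```python
import math

import numpy as np


def betas_for_alpha_bar(num_steps, alpha_bar, max_beta=0.999):
    # beta_i = 1 - alpha_bar(t_{i+1}) / alpha_bar(t_i), clipped at max_beta.
    return np.array(
        [
            min(1 - alpha_bar((i + 1) / num_steps) / alpha_bar(i / num_steps), max_beta)
            for i in range(num_steps)
        ]
    )


def cosine_alpha_bar(t):
    # Same lambda as the "cosine" branch of get_named_beta_schedule.
    return math.cos((t + 0.008) / 1.008 * math.pi / 2) ** 2


T = 50
betas = betas_for_alpha_bar(T, cosine_alpha_bar)
alphas_cumprod = np.cumprod(1.0 - betas)
expected = np.array([cosine_alpha_bar((i + 1) / T) / cosine_alpha_bar(0) for i in range(T)])
```

Clipping the final beta at 0.999 avoids a step with `beta = 1`, where `alpha_bar(1)` is numerically zero.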

+def space_timesteps(num_timesteps, section_counts):

+ if isinstance(section_counts, str):

+ if section_counts.startswith("ddim"):

+ desired_count = int(section_counts[len("ddim") :])

+ for i in range(1, num_timesteps):

+ if len(range(0, num_timesteps, i)) == desired_count:

+ return set(range(0, num_timesteps, i))

+ raise ValueError(

+ f"cannot create exactly {desired_count} steps with an integer stride"

+ )

+ section_counts = [int(x) for x in section_counts.split(",")]

+ size_per = num_timesteps // len(section_counts)

+ extra = num_timesteps % len(section_counts)

+ start_idx = 0

+ all_steps = []

+ for i, section_count in enumerate(section_counts):

+ size = size_per + (1 if i < extra else 0)

+ if size < section_count:

+ raise ValueError(

+ f"cannot divide section of {size} steps into {section_count}"

+ )

+ if section_count <= 1:

+ frac_stride = 1

+ else:

+ frac_stride = (size - 1) / (section_count - 1)

+ cur_idx = 0.0

+ taken_steps = []

+ for _ in range(section_count):

+ taken_steps.append(start_idx + round(cur_idx))

+ cur_idx += frac_stride

+ all_steps += taken_steps

+ start_idx += size

+ return set(all_steps)

+

+
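`space_timesteps` chooses which of the original diffusion steps to keep. For a string such as `"10"` it spreads the count over each section with a fractional stride and rounds; for `"ddimN"` it searches for an integer stride that yields exactly N steps. A reduced sketch of both cases for a single section (hypothetical helper names):

```python
def evenly_spaced_steps(num_timesteps, count):
    # Fractional stride so that the first and last steps are both kept.
    stride = 1 if count <= 1 else (num_timesteps - 1) / (count - 1)
    return sorted({round(k * stride) for k in range(count)})


def ddim_steps(num_timesteps, desired_count):
    # Smallest integer stride that yields exactly desired_count steps.
    for stride in range(1, num_timesteps):
        if len(range(0, num_timesteps, stride)) == desired_count:
            return set(range(0, num_timesteps, stride))
    raise ValueError(f"cannot create exactly {desired_count} steps with an integer stride")


print(evenly_spaced_steps(100, 10))
```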

+def create_gaussian_diffusion(

+ *,

+ steps=1000,

+ learn_sigma=False,

+ sigma_small=False,

+ noise_schedule="linear",

+ use_kl=False,

+ predict_xstart=False,

+ rescale_timesteps=False,

+ rescale_learned_sigmas=False,

+ timestep_respacing="",

+):

+ betas = get_named_beta_schedule(noise_schedule, steps)

+ if use_kl:

+ loss_type = LossType.RESCALED_KL

+ elif rescale_learned_sigmas:

+ loss_type = LossType.RESCALED_MSE

+ else:

+ loss_type = LossType.MSE

+ if not timestep_respacing:

+ timestep_respacing = [steps]

+ return SpacedDiffusion(

+ use_timesteps=space_timesteps(steps, timestep_respacing),

+ betas=betas,

+ model_mean_type=(

+ ModelMeanType.EPSILON if not predict_xstart else ModelMeanType.START_X

+ ),

+ model_var_type=(

+ (ModelVarType.FIXED_LARGE if not sigma_small else ModelVarType.FIXED_SMALL)

+ if not learn_sigma

+ else ModelVarType.LEARNED_RANGE

+ ),

+ loss_type=loss_type,

+ rescale_timesteps=rescale_timesteps,

+ )

+

+
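`create_gaussian_diffusion` builds the noise schedule and hands it to `SpacedDiffusion`. Assuming that class follows the standard DDPM forward process (as in guided-diffusion, from which this code appears to be ported), a training sample is noised as `x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps`. A NumPy sketch with the same linear schedule; `q_sample` here is a hypothetical stand-alone function, not the ppsci API:

```python
import numpy as np


def linear_betas(num_steps):
    # Same scaling as get_named_beta_schedule("linear", ...).
    scale = 1000 / num_steps
    return np.linspace(scale * 0.0001, scale * 0.02, num_steps, dtype=np.float64)


def q_sample(x0, t, alphas_cumprod, noise):
    # x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps, one t per batch element.
    abar = alphas_cumprod[t].reshape(-1, *([1] * (x0.ndim - 1)))
    return np.sqrt(abar) * x0 + np.sqrt(1.0 - abar) * noise


betas = linear_betas(1000)
alphas_cumprod = np.cumprod(1.0 - betas)
rng = np.random.default_rng(0)
x0 = rng.uniform(-1, 1, size=(2, 1, 8, 8))  # data already scaled to [-1, 1]
noise = rng.normal(size=x0.shape)
xt = q_sample(x0, np.array([0, 999]), alphas_cumprod, noise)
```

At `t = 0` the sample is almost unchanged, while at `t = 999` it is essentially pure noise, which is why training data is first normalized to [-1, 1].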

+def load_elbow_flow(path):

+ return np.load(f"{path}")[1:]

+

+

+def load_channel_flow(

+ path,

+ t_start=0,

+ t_end=1200,

+ t_every=1,

+):

+ return np.load(f"{path}")[t_start:t_end:t_every]

+

+

+def load_periodic_hill_flow(path):

+ data = np.load(f"{path}")

+ return data

+

+

+def load_3d_flow(path):

+ data = np.load(f"{path}")

+ return data

+

+

+class Normalizer_ts(object):

+ def __init__(self, params=[], method="-11", dim=None):

+ self.params = params

+ self.method = method

+ self.dim = dim

+

+ def fit_normalize(self, data):

+ assert isinstance(data, paddle.Tensor)

+ if len(self.params) == 0:

+ if self.method == "-11" or self.method == "01":

+ if self.dim is None:

+ self.params = paddle.max(x=data), paddle.min(x=data)

+ else:

+ self.params = (

+ paddle.max(x=data, axis=self.dim, keepdim=True),

+ paddle.min(x=data, axis=self.dim, keepdim=True),

+ )

+ elif self.method == "ms":

+ if self.dim is None:

+ self.params = paddle.mean(x=data, axis=self.dim), paddle.std(

+ x=data, axis=self.dim

+ )

+ else:

+ self.params = paddle.mean(

+ x=data, axis=self.dim, keepdim=True

+ ), paddle.std(x=data, axis=self.dim, keepdim=True)

+ elif self.method == "none":

+ self.params = None

+ return self.fnormalize(data, self.params, self.method)

+

+ def normalize(self, new_data):

+ if not new_data.place == self.params[0].place:

+ self.params = tuple(paddle.to_tensor(p, place=new_data.place) for p in self.params)

+ return self.fnormalize(new_data, self.params, self.method)

+

+ def denormalize(self, new_data_norm):

+ if not new_data_norm.place == self.params[0].place:

+ self.params = tuple(paddle.to_tensor(p, place=new_data_norm.place) for p in self.params)

+ return self.fdenormalize(new_data_norm, self.params, self.method)

+

+ def get_params(self):

+ if self.method == "ms":

+ print("returning mean and std")

+ elif self.method == "01":

+ print("returning max and min")

+ elif self.method == "-11":

+ print("returning max and min")

+ elif self.method == "none":

+ print("do nothing")

+ return self.params

+

+ @staticmethod

+ def fnormalize(data, params, method):

+ if method == "-11":

+ return (data - params[1]) / (params[0] - params[1]) * 2 - 1

+ elif method == "01":

+ return (data - params[1]) / (params[0] - params[1])

+ elif method == "ms":

+ return (data - params[0]) / params[1]

+ elif method == "none":

+ return data

+

+ @staticmethod

+ def fdenormalize(data_norm, params, method):

+ if method == "-11":

+ return (data_norm + 1) / 2 * (params[0] - params[1]) + params[1]

+ elif method == "01":

+ return data_norm * (params[0] - params[1]) + params[1]

+ elif method == "ms":

+ return data_norm * params[1] + params[0]

+ elif method == "none":

+ return data_norm

+

+

+def create_slim(cfg):

+ ###### read data - fois ######

+ if cfg.CNF.load_data_fn == "load_3d_flow":

+ fois = load_3d_flow(cfg.CNF.data_path)

+ elif cfg.CNF.load_data_fn == "load_elbow_flow":

+ fois = load_elbow_flow(cfg.CNF.data_path)

+ elif cfg.CNF.load_data_fn == "load_channel_flow":

+ fois = load_channel_flow(cfg.CNF.data_path)

+ elif cfg.CNF.load_data_fn == "load_periodic_hill_flow":

+ fois = load_periodic_hill_flow(cfg.CNF.data_path)

+ else:

+ fois = np.load(cfg.CNF.data_path)

+

+ spatio_shape = fois.shape[1:-1]

+

+ ###### read data - coordinate ######

+ if cfg.CNF.coor_path is None:

+ coord = [np.linspace(0, 1, i) for i in spatio_shape]

+ coord = np.stack(np.meshgrid(*coord, indexing="ij"), axis=-1)

+ else:

+ coord = np.load(cfg.CNF.coor_path)

+ coord = coord.astype("float32")

+ fois = fois.astype("float32")

+

+ ###### convert to tensor ######

+ fois = paddle.to_tensor(fois) if not isinstance(fois, paddle.Tensor) else fois

+ coord = paddle.to_tensor(coord) if not isinstance(coord, paddle.Tensor) else coord

+ N_samples = fois.shape[0]

+

+ ###### normalizer ######

+ in_normalizer = Normalizer_ts(**cfg.CNF.normalizer)

+ out_normalizer = Normalizer_ts(**cfg.CNF.normalizer)

+ norm_params = paddle.load(cfg.CNF.normalizer_params_path)

+ in_normalizer.params = norm_params["x_normalizer_params"]

+ out_normalizer.params = norm_params["y_normalizer_params"]

+

+ cnf_model = SIRENAutodecoder_film(**cfg.CNF.CONFILD)

+

+ return cnf_model, in_normalizer, out_normalizer, coord

+

+

+def dl_iter(dl):

+ while True:

+ yield from dl

+

+
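`train` is step-based rather than epoch-based, so `dl_iter` wraps each `DataLoader` in an endless generator and the loop pulls batches with `next()`. Because every pass calls `iter(dl)` again, a shuffling loader should produce a fresh order on each pass. A minimal sketch using a plain list in place of a `DataLoader`:

```python
import itertools


def dl_iter(dl):
    # Restart iteration over dl whenever it is exhausted.
    while True:
        yield from dl


batches = list(itertools.islice(dl_iter([["a"], ["b"]]), 5))
```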

+def train(cfg):

+ batch_size = cfg.TRAIN.batch_size

+ test_batch_size = cfg.TRAIN.test_batch_size

+ ema_rate = cfg.TRAIN.ema_rate

+ ema_rate = (

+ [ema_rate]

+ if isinstance(ema_rate, float)

+ else [float(x) for x in ema_rate.split(",")]

+ )

+

+ lr_anneal_steps = cfg.TRAIN.lr_anneal_steps

+ final_lr = cfg.TRAIN.final_lr

+ step = 0

+ resume_step = 0

+ microbatch = cfg.TRAIN.microbatch if cfg.TRAIN.microbatch > 0 else batch_size

+

+ train_data = np.load(cfg.DATA.train_data)

+ valid_data = np.load(cfg.DATA.valid_data)

+ print(

+ f"Train data shape: {train_data.shape}, range: [{train_data.min():.3f}, {train_data.max():.3f}]"

+ )

+ print(

+ f"Valid data shape: {valid_data.shape}, range: [{valid_data.min():.3f}, {valid_data.max():.3f}]"

+ )

+

+ max_val, min_val = np.max(train_data, keepdims=True), np.min(

+ train_data, keepdims=True

+ )

+ norm_train_data = -1 + (train_data - min_val) * 2.0 / (max_val - min_val)

+ norm_valid_data = -1 + (valid_data - min_val) * 2.0 / (max_val - min_val)

+
+    print(
+        f"After normalization: train range: [{norm_train_data.min():.3f}, {norm_train_data.max():.3f}]"
+    )
+
+    # Insert a singleton channel axis at position 1, e.g. (N, H, W) -> (N, 1, H, W).
+    norm_train_data = paddle.to_tensor(norm_train_data[:, None, ...])
+    norm_valid_data = paddle.to_tensor(norm_valid_data[:, None, ...])
+
+    # dl_iter is used as an endless iterator: the loop below calls next() on it every step.
+    dl_train = dl_iter(
+        paddle.io.DataLoader(
+            paddle.io.TensorDataset(norm_train_data),
+            batch_size=batch_size,
+            shuffle=True,
+        )
+    )
+    dl_valid = dl_iter(
+        paddle.io.DataLoader(
+            paddle.io.TensorDataset(norm_valid_data),
+            batch_size=test_batch_size,
+            shuffle=True,
+        )
+    )
+
+    unet_model = create_model(
+        image_size=cfg.UNET.image_size,
+        num_channels=cfg.UNET.num_channels,
+        num_res_blocks=cfg.UNET.num_res_blocks,
+        num_heads=cfg.UNET.num_heads,
+        num_head_channels=cfg.UNET.num_head_channels,
+        attention_resolutions=cfg.UNET.attention_resolutions,
+        channel_mult=cfg.UNET.channel_mult,
+    )
+    print(
+        f"Model created with {sum(int(p.numel()) for p in unet_model.parameters()):,} parameters"
+    )
+
+    diff_model = create_gaussian_diffusion(
+        steps=cfg.Diff.steps, noise_schedule=cfg.Diff.noise_schedule
+    )
+    print(
+        f"Diffusion model created with {cfg.Diff.steps} steps, noise schedule: {cfg.Diff.noise_schedule}"
+    )
+
+    opt = paddle.optimizer.AdamW(
+        parameters=unet_model.parameters(),
+        learning_rate=cfg.TRAIN.lr,
+        weight_decay=cfg.TRAIN.weight_decay,
+    )
+    print(
+        f"Optimizer initialized with lr={cfg.TRAIN.lr}, weight_decay={cfg.TRAIN.weight_decay}"
+    )
+
+    # Diffusion timesteps are drawn uniformly at random for every training example.
+    schedule_sampler = UniformSampler(diff_model)
+
+    # One independent copy of the model weights per EMA decay rate in `ema_rate`.
+    ema_params = []
+    for _ in range(len(ema_rate)):
+        ema_param_dict = {}
+        for name, param in unet_model.named_parameters():
+            ema_param_dict[name] = copy.deepcopy(param.detach())
+        ema_params.append(ema_param_dict)
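+    # Reference formula, assuming _update_ema (defined elsewhere in this file) applies
+    # the standard exponential moving average after each optimizer step, per parameter
+    # and per rate in ema_rate:
+    #     ema = rate * ema + (1 - rate) * param
+    # e.g. rate = 0.9999, ema = 1.0, param = 2.0 gives ema = 1.0001; such an EMA
+    # averages over roughly 1 / (1 - rate) = 10,000 recent steps.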

+
+    global train_losses, valid_losses
+    train_losses.clear()
+    valid_losses.clear()
+
+    valid_interval = 50
+    max_steps = cfg.TRAIN.max_steps if hasattr(cfg.TRAIN, "max_steps") else 10000
+    print(
+        f"Starting training with max_steps={max_steps}, lr_anneal_steps={lr_anneal_steps}"
+    )
+
+    while step + resume_step < max_steps:
+        cond = {}  # unconditional training: no extra model kwargs
+        train_batch = next(dl_train)
+
+        unet_model.train()
+        # Reset gradients once per training step.
+        opt.clear_grad()
+
+        step_losses = []
+
+        for i in range(0, len(train_batch), microbatch):
+            micro = train_batch[i : i + microbatch]
+            micro_cond = {k: v[i : i + microbatch] for k, v in cond.items()}
+
+            t, weights = schedule_sampler.sample(len(micro))
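+            # Assuming the guided-diffusion style ScheduleSampler this code mirrors:
+            # t is drawn with probability p[t] and weights = 1 / (T * p[t]), so the mean
+            # of weights * L_t is an unbiased estimate of (1 / T) * sum_t L_t. With
+            # UniformSampler p[t] = 1 / T, so every weight is exactly 1.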

+
+            compute_losses = functools.partial(
+                diff_model.training_losses,
+                unet_model,
+                paddle.stack(micro),
+                t,
+                model_kwargs=micro_cond,
+            )
+            losses = compute_losses()
+
+            # Only loss-aware samplers (not the UniformSampler used here) adapt their
+            # timestep distribution to the observed per-sample losses.
+            if isinstance(schedule_sampler, LossAwareSampler):
+                schedule_sampler.update_with_local_losses(t, losses["loss"].detach())
+
+            loss = (losses["loss"] * weights).mean()
+
+            # Print the individual loss terms once, on the first microbatch of the run.
+            if step == 0 and i == 0:
+                print(
+                    f"First loss computation - loss: {loss.item():.6f}, losses keys: {list(losses.keys())}"
+                )
+                if "mse" in losses:
+                    print(f"MSE loss: {losses['mse'].mean().item():.6f}")
+                if "vb" in losses:
+                    print(f"VB loss: {losses['vb'].mean().item():.6f}")
+
+            step_losses.append(loss.item())
+
+            if i == 0:
+                log_loss_dict(
+                    diff_model,
+                    t,
+                    {
+                        k: v * weights
+                        for k, v in losses.items()
+                        if isinstance(v, paddle.Tensor)
+                    },
+                    is_valid=False,
+                )
+
+            loss.backward()
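+            # backward() accumulates into the existing .grad buffers (cleared only once
+            # per step), so each parameter ends up with the SUM of the per-microbatch
+            # gradients of the mean loss. E.g. a batch of 8 split with microbatch = 4
+            # yields g1 + g2, twice the gradient (g1 + g2) / 2 of the full-batch mean.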

+
+        paddle.nn.utils.clip_grad_norm_(unet_model.parameters(), max_norm=1.0)
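+        # clip_grad_norm_ rescales all gradients in place when their global L2 norm
+        # exceeds max_norm, multiplying them by about max_norm / total_norm: a total
+        # norm of 4.0 with max_norm = 1.0 scales every gradient by roughly 0.25.
+        # Assuming _compute_norms reads the current .grad values, the GradNorm it
+        # reports is the post-clipping norm, so it never exceeds about 1.0.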

+
+        grad_norm, param_norm = _compute_norms(unet_model)
+        opt.step()
+
+        if step_losses:
+            avg_step_loss = sum(step_losses) / len(step_losses)
+            train_losses.append(avg_step_loss)
+
+            if step % 50 == 0:
+                current_lr = opt.get_lr()
+                print(
+                    f"Step {step}: Loss={avg_step_loss:.6f}, GradNorm={grad_norm:.6f}, ParamNorm={param_norm:.6f}, LR={current_lr:.2e}"
+                )
+
+        _update_ema(ema_rate, ema_params, unet_model)
+
+        if lr_anneal_steps is not None and lr_anneal_steps != 0:
+            _anneal_lr(lr_anneal_steps, step, resume_step, opt, final_lr, cfg.TRAIN.lr)
+
+        step += 1
+
+        # Every `valid_interval` steps, score one validation batch with gradients disabled.
+        if step % valid_interval == 0:
+            unet_model.eval()
+            with paddle.no_grad():
+                valid_batch = next(dl_valid)
+                all_valid_losses = []
+
+                for i in range(0, len(valid_batch), microbatch):
+                    micro = valid_batch[i : i + microbatch]
+                    micro_cond = {k: v[i : i + microbatch] for k, v in cond.items()}
+
+                    t, weights = schedule_sampler.sample(len(micro))
+
+                    compute_losses = functools.partial(
+                        diff_model.training_losses,
+                        unet_model,
+                        paddle.stack(micro),
+                        t,
+                        model_kwargs=micro_cond,
+                        valid=True,
+                    )
+                    losses = compute_losses()
+
+                    if "loss" in losses:
+                        all_valid_losses.append(
+                            (losses["loss"] * weights).mean().item()
+                        )
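+                # avg_valid_loss is an unweighted mean of per-microbatch means, so a short
+                # final microbatch counts as much as a full one. E.g. means of 0.2 over 4
+                # samples and 0.5 over 2 samples average to 0.35, whereas the per-sample
+                # mean is (0.8 + 1.0) / 6 = 0.3.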

+
+                if len(all_valid_losses) > 0:
+                    avg_valid_loss = sum(all_valid_losses) / len(all_valid_losses)